Abstract

High school students often lack access to personalized and empathetic career guidance. This pilot study investigates whether a retrieval-augmented AI assistant can provide clearer, more relevant, and more empathetic career advice than baseline large language model prompting. A curated knowledge base was developed from diverse career resources, and the system was tested on 100 representative student queries. Outputs were automatically evaluated across six dimensions (clarity, relevance, empathy, faithfulness, answer relevance, and context relevance) using GPT-4 as a baseline evaluator, and complemented by human ratings from 25 randomly selected students in a 12th-grade class on three core metrics (clarity, relevance, empathy). Human ratings closely mirrored the automated evaluations, supporting GPT-4’s use as a proxy for rapid system assessment. Across both evaluation methods, the retrieval-augmented model consistently outperformed the baseline, with the largest gains observed in empathy. These findings suggest that retrieval-augmented generation can enhance AI-driven career guidance. The system is currently being piloted in over 20 schools across Kazakhstan, creating opportunities for larger-scale validation and continuous improvement.

Keywords: AI career guidance, large language models, retrieval-augmented generation, educational technology, student support

Introduction

Career decision-making is a critical developmental task for high-school students, yet access to personalized, reliable, and empathetic guidance remains limited in many education systems1. Reports documenting national systems demonstrate fragmented provision and inconsistent coordination of careers services in the United States, which reduces equitable access to counseling support2. Structural constraints, such as high counselor-to-student ratios, also hinder tailored advising, particularly in underfunded and rural schools3. Evidence suggests that well-designed, theory-driven career interventions can improve academic engagement and the transition to post-secondary pathways, but such programs are unevenly distributed4.

Classical career development frameworks explain why personalization and empathic framing matter for school-age users. Holland’s RIASEC model (Realistic, Investigative, Artistic, Social, Enterprising, Conventional) emphasizes person-environment fit as central to vocational choice5, Super’s life-span/life-space perspective foregrounds developmental timing and role salience in career decisions6, and Social Cognitive Career Theory identifies self-efficacy, outcome expectations, and contextual supports as mediators of choice7. Constructivist/narrative orientations (career construction) further argue that guidance should support meaning-making rather than merely listing options8.

Recent advances in artificial intelligence (AI) have opened opportunities to extend individualized support beyond current counseling capacity, and policymakers are actively exploring AI’s role in teaching and student support9. At the same time, scholars warn that deploying AI in K-12 contexts raises concrete ethical challenges (privacy, bias, transparency, and fairness) that must shape system design and evaluation10. Applied pilots and early demonstrations indicate AI can surface relevant occupations and resources at scale, and prototype career-support tools have shown promise in improving relevance and accessibility, though many studies remain limited in scope or methodological transparency11,12.

One promising technical strategy is retrieval-augmented generation (RAG), in which generative large language models (LLMs) are explicitly grounded by retrieved, versioned documents from a curated knowledge base to improve factual grounding and provenance13,14. Recent RAG tutorials and surveys document maturing toolchains and recommend evaluation along multiple axes (retrieval quality, faithfulness, context relevance, and user outcomes), which is directly relevant to advising tasks that mix factual guidance with empathic framing15,16. Domain case studies (e.g., RAG in health risk assessment) show that retrieval grounding can increase specificity and reduce unsupported assertions in regulated settings, a useful analog for career guidance, where provenance and accuracy matter for student decisions17.

Nonetheless, large language models retain important failure modes: hallucination and factual drift remain documented risks for ungrounded generation18, and recent work shows that using LLMs as sole evaluators introduces judge-level biases and circularity that complicate the interpretation of automated scores19. Conversational agents can, however, produce empathic, process-oriented interactions in practice (e.g., mental-health chatbots), suggesting that a grounded, safety-aware RAG assistant might pair informational accuracy with supportive tone20.

Despite promising early pilots, current AI-based career guidance systems remain limited in scope, personalization, and empirical validation. Recent studies highlight that LLM-driven tools often struggle with domain-specific accuracy, context sensitivity, and the integration of multiple career resources21. Evaluations of AI career chatbots report variability in advice quality, with risks of overgeneralization and occasional misalignment with students’ interests22. Systematic reviews suggest that most existing solutions rely on static datasets, lacking mechanisms to incorporate up-to-date occupational information or localized labor market trends23. Furthermore, research emphasizes the need for rigorous user-centered evaluation frameworks, including both objective metrics and subjective perceptions of usefulness, clarity, and empathy24. These limitations collectively justify the development of retrieval-augmented approaches that aim to combine LLM generative capabilities with curated, dynamic knowledge bases to improve factual grounding and relevance in career guidance25.

The primary objective of this study is to evaluate whether integrating a curated knowledge base with an LLM via the RAG pipeline can enhance the relevance, clarity, and empathic quality of career guidance responses for high-school students, as measured by both automated six-metric evaluation and human ratings on three core dimensions. By addressing existing gaps in personalization, factual grounding, and dynamic content integration, this work aims to provide a scalable, ethical, and context-aware AI assistant that complements traditional counseling. The evaluation sample consisted of an entire high-school class, supporting the representativeness of student queries for typical counseling contexts.

Taken together, theoretical work on career development, recent evidence and ethical reviews of AI in K-12 settings, and methodological advances in RAG provide a clear rationale for a pilot evaluation of a retrieval-grounded AI assistant for high-school career guidance. This paper therefore presents a pilot study that (a) constructs a curated, versioned knowledge base for career advice, (b) integrates it with a generative assistant via a RAG pipeline, and (c) evaluates outputs using automated assessment across six metrics and human ratings on three core metrics (relevance, clarity, empathy), reporting strong agreement between the two evaluation modalities while documenting dataset curation and evaluator limitations. Notably, the system has already been deployed in over 20 schools across Kazakhstan, allowing for continuous data collection and iterative improvement beyond this initial study.

Methods

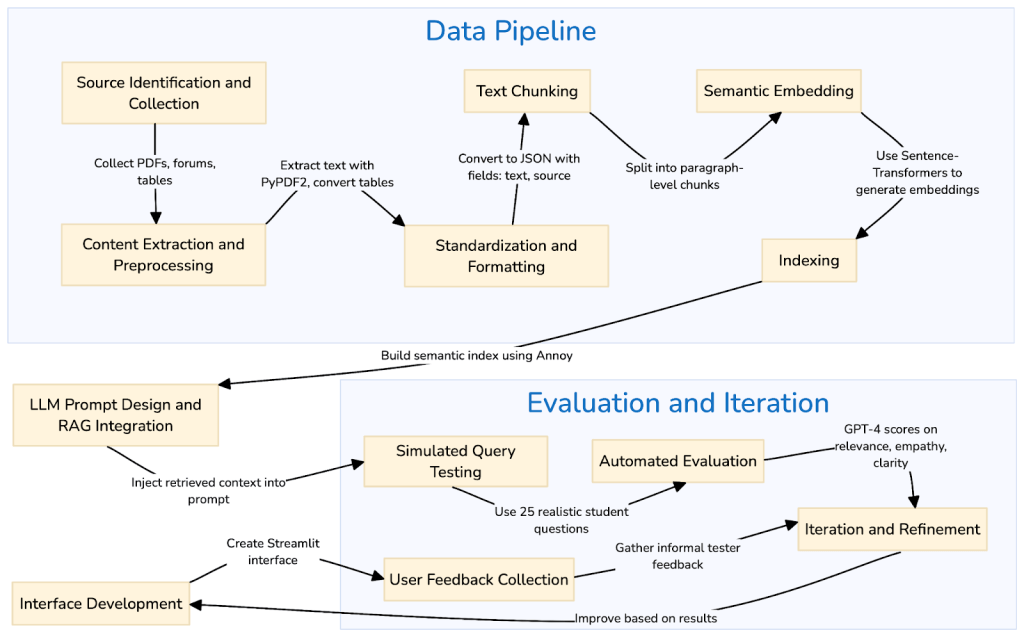

This study employed an applied systems engineering approach to design and evaluate a Retrieval-Augmented Generation (RAG)-based AI assistant intended for high school career guidance. Rather than using a traditional experimental or observational model, the project was structured around iterative system development, functional evaluation, and informal user testing to assess performance and usability. An overview of the process is shown in Figure 1.

Although the intended audience of the system is high school students, no formal participant recruitment was conducted during the development process. Instead, a set of 100 synthetic but realistic student queries was generated to simulate genuine interactions with the assistant. The queries were generated through a multi-step process: (1) reviewing existing literature on student career questions and counseling interactions, (2) categorizing themes such as academic choices, career aspirations, skills development, and emotional concerns, (3) balancing levels of complexity (from straightforward fact-seeking to open-ended dilemmas), and (4) embedding variations in emotional tone (neutral, anxious, uncertain, aspirational). This process was intended to ensure a representative coverage of the types of queries high-school students typically pose.

To build the retrieval component of the RAG system, a custom dataset was assembled from multiple heterogeneous sources. This included 20 PDF documents such as occupational handbooks and career exploration guides, along with six structured files in CSV or Excel format containing skill taxonomies and job requirements. Content was also drawn from career discussion forums. All sources were converted into a unified JSON format containing standardized “text” and “source” fields to ensure consistency across the retrieval process. PDF files were parsed using the PyPDF2 library (via the PdfReader class), and the resulting text was split into paragraph-based chunks, typically containing one to three paragraphs each. For structured data, relevant rows were converted into natural-language statements describing job attributes, such as: “A data scientist typically needs skills in Python, statistics, and machine learning.” Each text chunk was embedded using the sentence-transformer model all-MiniLM-L6-v2, and the resulting vectors were indexed using Annoy (v1.17.3) to support semantic similarity search. The pipeline was implemented in Python 3.11.11, with key dependencies including huggingface-hub (v0.33.1), sentence-transformers (v4.1.0), annoy (v1.17.3), and streamlit (v1.46.0), ensuring reproducibility of the environment.
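The ingestion steps described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the project's actual code: the column names `occupation` and `skills`, the `;` separator, and the helper names are hypothetical, and the embedding and Annoy indexing steps are omitted.

```python
def pdf_to_text(path):
    """Extract raw text from a PDF with PyPDF2's PdfReader (imported lazily)."""
    from PyPDF2 import PdfReader  # third-party; only needed when this function is called
    reader = PdfReader(path)
    return "\n\n".join((page.extract_text() or "") for page in reader.pages)

def chunk_paragraphs(text, max_paragraphs=3):
    """Split on blank lines and group up to `max_paragraphs` paragraphs per chunk."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    return ["\n\n".join(paragraphs[i:i + max_paragraphs])
            for i in range(0, len(paragraphs), max_paragraphs)]

def join_with_and(items):
    """Join a list as 'a', 'a and b', or 'a, b, and c'."""
    if len(items) <= 2:
        return " and ".join(items)
    return ", ".join(items[:-1]) + ", and " + items[-1]

def row_to_statement(row):
    """Turn one structured row into a sentence (hypothetical column names)."""
    skills = [s.strip() for s in row["skills"].split(";") if s.strip()]
    article = "An" if row["occupation"][0].lower() in "aeiou" else "A"
    return f"{article} {row['occupation']} typically needs skills in {join_with_and(skills)}."

def to_records(chunks, source):
    """Wrap chunks in the unified schema with 'text' and 'source' fields."""
    return [{"text": chunk, "source": source} for chunk in chunks]
```

Each resulting record can then be serialized with `json.dump` and passed to the embedding step.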

The knowledge base was curated through a two-stage filtering process to enhance accuracy and reduce noise. First, only materials aligned with defined inclusion criteria — English-language publications (2015-2025) on career guidance, AI in counseling, RAG/LLM applications, ethics, personalization, and future skills — were retained. Second, within forum-derived content, non-informative or incomplete responses were removed, along with subjective or anecdotal statements containing phrases such as “in my experience,” “I remember,” “I tried,” “I used to,” or “when I was.” Entries with inappropriate or irrelevant language (e.g., profanity, slang like “lol,” or dismissive remarks such as “stupid” or “this sucks”) were also excluded. The remaining data was reviewed for thematic consistency and balanced coverage. While these steps significantly reduced subjectivity and low-quality inputs, potential biases persist due to the English-only scope and the disciplinary boundaries of the included sources.
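The second filtering stage can be illustrated with a small rule-based filter. The phrase lists below contain only the examples quoted above; the minimum-length threshold used to approximate "non-informative or incomplete" responses is a hypothetical choice, and the real pipeline may have used additional terms or manual review.

```python
import re

# Phrases quoted in the text as markers of anecdotal content.
ANECDOTE_PHRASES = ("in my experience", "i remember", "i tried", "i used to", "when i was")
# Examples of inappropriate or dismissive language quoted in the text.
INAPPROPRIATE_TERMS = ("lol", "stupid", "this sucks")
MIN_WORDS = 5  # hypothetical threshold for "non-informative or incomplete"

def _compile(phrases):
    """Build a case-insensitive, whole-word pattern matching any phrase."""
    alternatives = "|".join(re.escape(p) for p in phrases)
    return re.compile(rf"\b(?:{alternatives})\b", re.IGNORECASE)

ANECDOTE_RE = _compile(ANECDOTE_PHRASES)
INAPPROPRIATE_RE = _compile(INAPPROPRIATE_TERMS)

def keep_entry(text):
    """Return True if a forum entry passes all three exclusion rules."""
    if len(text.split()) < MIN_WORDS:
        return False
    if ANECDOTE_RE.search(text) or INAPPROPRIATE_RE.search(text):
        return False
    return True
```

Whole-word matching avoids false positives on words that merely contain a listed term.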

The AI assistant was built using Meta’s LLaMA-3-8B-Instruct, selected because it offers a balance between model size and efficiency, is openly available for research purposes, and allows reproducibility without reliance on closed-source APIs. Larger models were not considered feasible due to resource constraints, while smaller models were judged insufficient for generating nuanced, empathetic responses. In future work, a comparative evaluation of multiple models of varying sizes is planned. A custom RAG pipeline was implemented to improve the quality and relevance of its responses. In this architecture, the student’s query is first embedded and matched to relevant data chunks via semantic search. The top retrieved passages are then appended to the system prompt and passed to the LLM for response generation. Prompt engineering played a key role in shaping the output, with few-shot prompting strategies used to reinforce clarity, empathy, and helpfulness. For evaluation purposes, three prompt variations were selected to test robustness: (1) concise directive prompts, (2) extended instructional prompts emphasizing empathy, and (3) formal procedural prompts. These were chosen to represent the main design dimensions of brevity, emotional framing, and rigorous guidance in LLM prompting. A Streamlit-based user interface was developed to provide a lightweight and browser-accessible experience for students. The interface allowed users to input open-ended queries and receive two parallel responses, one from the base model and another from the RAG-augmented version, for direct comparison.
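The retrieve-then-prompt flow described above might look like the sketch below. It assumes the query and chunk vectors have already been computed, and it uses brute-force cosine similarity as a stand-in for the Annoy index; the function names and the message layout are illustrative, not the deployed implementation.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def retrieve(query_vec, records, k=3):
    """Return the k records most similar to the query.

    Each record is a dict with 'text', 'source', and 'vector' keys.
    Brute-force search stands in for approximate nearest-neighbor lookup.
    """
    ranked = sorted(records, key=lambda r: cosine(query_vec, r["vector"]), reverse=True)
    return ranked[:k]

def build_messages(system_prompt, passages, question):
    """Append retrieved passages to the system prompt and add the student query."""
    if passages:
        context = "\n\n".join(
            f"[{i}] {p['text']} (source: {p['source']})"
            for i, p in enumerate(passages, start=1)
        )
        system_prompt = f"{system_prompt}\n\nRetrieved context:\n{context}"
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]
```

Calling `build_messages` with an empty passage list yields the base-model prompt, which makes the side-by-side comparison straightforward to generate.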

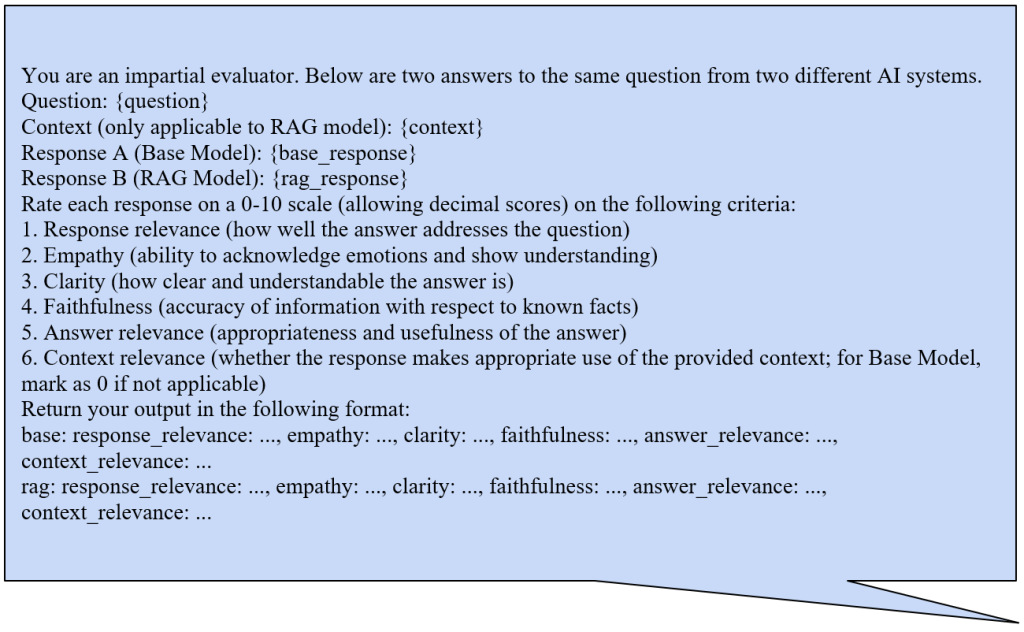

Evaluation of the system’s performance focused on six main dimensions: response relevance, empathy, clarity, faithfulness, answer relevance, and context relevance. Context relevance is primarily applicable to RAG outputs due to the explicit use of retrieved passages. For base model responses, it served as a control dimension and was usually rated low. For structured evaluation, the 100 synthetic student questions were submitted to both the base and RAG-enhanced models. Each output was rated independently by GPT-4 (Generative Pre-trained Transformer), used here as a baseline evaluator and proxy for rapid scoring, on a 0–10 scale (allowing decimal scores). GPT-4 was applied to enable relative comparisons between the base and RAG-enhanced models rather than to serve as a final arbiter of quality. To account for potential variability caused by prompt wording, the evaluation process was repeated three times using the variations described above. The three resulting datasets were first compared to assess scoring consistency across prompt variations by calculating the average standard deviation for each evaluation criterion and each model. For the primary analysis of model performance, scores were averaged across the three runs for each criterion, and these aggregated values were used to compare the base and RAG-enhanced models. While this design provides structured insight, it also introduces circularity since GPT-4 is itself a large language model evaluating another LLM. This limitation is explicitly acknowledged, and future iterations will complement automated scoring with expanded human-coded benchmarks and multi-rater evaluation schemes.
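A minimal sketch of how the evaluator's replies could be parsed and combined across the three prompt versions is given below. It assumes the reply follows the `base: ... rag: ...` format requested in the evaluation prompts, and it interprets "average standard deviation" as the per-question standard deviation across runs, averaged over questions; both the function names and that interpretation are assumptions.

```python
import re
from statistics import mean, stdev

def parse_scores(reply):
    """Parse 'base: metric: x, ... rag: metric: y, ...' into nested dicts of floats."""
    parsed = {}
    blocks = re.findall(
        r"\b(base|rag)\s*:\s*(.*?)(?=\b(?:base|rag)\s*:|\Z)",
        reply,
        flags=re.IGNORECASE | re.DOTALL,
    )
    for system, block in blocks:
        pairs = re.findall(r"(\w+)\s*:\s*(\d+(?:\.\d+)?)", block)
        parsed[system.lower()] = {name: float(value) for name, value in pairs}
    return parsed

def aggregate_runs(runs):
    """Combine per-question scores from several prompt versions.

    `runs` holds one list of per-question scores per prompt version
    (same metric, same model). Returns the per-question means used for
    the primary analysis and the average across-run standard deviation.
    """
    per_question = list(zip(*runs))
    means = [mean(values) for values in per_question]
    avg_sd = mean(stdev(values) for values in per_question)
    return means, avg_sd
```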

In addition to descriptive statistics (mean and standard deviation), we conducted paired-sample t-tests for each evaluation criterion to determine whether the observed differences between the base and RAG models were statistically significant. For each test, two-tailed p-values were calculated, and statistical significance was assessed at the α = 0.05 level. To complement significance testing, 95% confidence intervals were computed for mean differences. We also estimated effect sizes using Cohen’s d, providing an indication of the practical magnitude of improvements beyond numerical differences. For visualization, bar plots with error bars (95% CI) and boxplots were generated to illustrate central tendencies and variability.
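The paired analysis can be sketched with the standard library alone. The code computes the mean difference, the paired t-statistic, a 95% confidence interval, and Cohen's d in its paired-samples form (mean difference divided by the standard deviation of the differences). The default critical value of 1.984 assumes 99 degrees of freedom (100 queries); which variant of Cohen's d the authors used is not stated, so the paired form is an assumption.

```python
import math
from statistics import mean, stdev

def paired_summary(base, rag, t_crit=1.984):
    """Summarize paired differences (rag - base).

    t_crit is the two-tailed critical t value at alpha = 0.05; the
    default assumes df = 99 and should be changed for other sample sizes.
    Requires non-constant differences (non-zero standard deviation).
    """
    diffs = [r - b for b, r in zip(base, rag)]
    n = len(diffs)
    mean_diff = mean(diffs)
    sd_diff = stdev(diffs)            # sample SD of the differences
    se = sd_diff / math.sqrt(n)       # standard error of the mean difference
    return {
        "n": n,
        "mean_diff": mean_diff,
        "t": mean_diff / se,
        "ci95": (mean_diff - t_crit * se, mean_diff + t_crit * se),
        "cohens_d": mean_diff / sd_diff,
    }
```

Two-tailed p-values require the t distribution, which the standard library does not provide; `scipy.stats.ttest_rel` is one common option for that step.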

| Prompt Version 2 | Prompt Version 3 |
| --- | --- |
| You are an impartial evaluator, but please conduct the evaluation with a balanced perspective that recognizes not only the logical correctness of the answers but also their emotional resonance with the user. Below are two answers to the same question from two different AI systems. Your task is to carefully analyze each response across multiple dimensions. Question: {question} Context (only applicable to RAG model): {context} Response A (Base Model): {base_response} Response B (RAG Model): {rag_response} Rate each dimension on a 0-10 scale (allowing decimal values), where 0 represents the lowest performance and 10 represents the highest. When rating, please consider: 1. Response relevance – how directly and thoroughly the response answers the user’s question. 2. Empathy – whether the response acknowledges the emotional or human dimension of the query, showing sensitivity and understanding. 3. Clarity – whether the response is clear, well-structured, and easy to understand. 4. Faithfulness – whether the response provides accurate and factually correct information. 5. Answer relevance – whether the response is genuinely helpful and contextually useful for the user. 6. Context relevance – whether the response uses the provided context appropriately (for Base Model, score as 0 if not applicable). Return your evaluation in this format: base: response_relevance: …, empathy: …, clarity: …, faithfulness: …, answer_relevance: …, context_relevance: … rag: response_relevance: …, empathy: …, clarity: …, faithfulness: …, answer_relevance: …, context_relevance: … | You are tasked with performing a structured evaluation of two AI-generated responses to the same question. Your role is to assess their quality in a rigorous and objective manner, following standardized criteria. Question: {question} Context (only applicable to RAG model): {context} Response A (Base Model): {base_response} Response B (RAG Model): {rag_response} Please assign a numerical score 0-10 (allowing decimal values) for each of the following six dimensions: 1. Response relevance – degree to which the response addresses the stated question. 2. Empathy – ability of the response to acknowledge user perspective and express understanding. 3. Clarity – readability, coherence, and precision of expression. 4. Faithfulness – factual correctness relative to established knowledge. 5. Answer relevance – overall usefulness and appropriateness of the response. 6. Context relevance – adequacy of contextual grounding (for Base Model, assign 0 as it lacks explicit context). Report your results in the following format: base: response_relevance: …, empathy: …, clarity: …, faithfulness: …, answer_relevance: …, context_relevance: … rag: response_relevance: …, empathy: …, clarity: …, faithfulness: …, answer_relevance: …, context_relevance: … |

In parallel, quantitative feedback was collected from 25 students from a randomly selected 12th-grade class. Each student rated both models on a 0–10 scale across three core criteria (response relevance, empathy, clarity). The entire class participated during a scheduled session, ensuring coverage without self-selection bias; participation was anonymous and framed as low-stakes to avoid any perception of pressure. Students also provided informal qualitative feedback on the prototype interface, mainly suggesting ways to make the comparison between the two answers clearer. Only grade level was recorded; no further demographic information was collected. As the project did not qualify as human subjects research under regulatory definitions, no institutional review board (IRB) approval was required. While the small sample size limits generalizability, the pilot still offers exploratory insights. Human and automated evaluations were compared by first calculating mean scores for Base and RAG outputs on each metric and then computing the gain (Δ) from Base to RAG. These aggregated gains were then compared across evaluators, enabling an analysis of whether humans and GPT-4 as a proxy captured similar improvement patterns.

Results

Overall performance: means and confidence intervals

Aggregated means and 95% confidence intervals for all six evaluation metrics are shown in Figure 3. Across the board, RAG consistently outperforms the Base system. For response relevance, the Base model achieved a mean of 7.97 (95% CI [7.93, 8.02]), while RAG reached 8.86 (95% CI [8.74, 8.97]). For empathy, Base averaged 7.21 (95% CI [7.13, 7.30]) compared to 8.41 (95% CI [8.31, 8.50]) for RAG. On clarity, Base scored 8.32 (95% CI [8.27, 8.36]) versus 8.94 (95% CI [8.86, 9.02]) for RAG. Faithfulness improved from 8.83 (95% CI [8.77, 8.89]) in Base to 9.43 (95% CI [9.32, 9.54]) in RAG. For answer relevance, Base stood at 7.97 (95% CI [7.92, 8.03]) and RAG at 8.90 (95% CI [8.79, 9.01]). The overall average followed the same pattern: 8.06 (95% CI [8.02, 8.11]) for Base versus 8.77 (95% CI [8.67, 8.87]) for RAG. For every metric reported here, the confidence intervals of the two systems do not overlap, with the RAG interval lying entirely above the Base interval.

Statistical significance and effect sizes

| Metric | Base mean | RAG mean | Mean diff (RAG−Base) | t-statistic | p-value | Cohen’s d |
| --- | --- | --- | --- | --- | --- | --- |
| Response relevance | 7.97 | 8.86 | 0.88 | 18.33 | 1.35e-33 | 1.83 |
| Empathy | 7.21 | 8.41 | 1.19 | 26.50 | 9.47e-47 | 2.65 |
| Clarity | 8.32 | 8.94 | 0.62 | 14.07 | 2.31e-25 | 1.41 |
| Faithfulness | 8.83 | 9.43 | 0.60 | 12.41 | 6.79e-22 | 1.24 |
| Answer relevance | 7.97 | 8.90 | 0.93 | 19.76 | 3.86e-36 | 1.98 |
| Average | 8.06 | 8.77 | 0.71 | 15.58 | 3.23e-28 | 1.56 |

Paired t-tests were conducted for each metric and for the overall average on the same set of 100 queries (Base vs. RAG). All comparisons showed statistically significant improvements in favor of RAG after FDR correction (Table 2). For example, empathy improved by +1.19 points (t = 26.50, p ≈ 9.47e-47), and response relevance by +0.88 points (t = 18.33, p ≈ 1.35e-33).
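The text does not name the FDR procedure. Assuming the common Benjamini-Hochberg method, the adjustment can be sketched as follows; the function name is illustrative, and a different FDR procedure would give different adjusted values.

```python
def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p-values in the input order.

    Each p-value is scaled by m / rank (rank 1 = smallest), and a running
    minimum taken from the largest rank downward enforces monotonicity.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted

# p-values reported in Table 2 (five metrics plus the overall average)
table2_p = [1.35e-33, 9.47e-47, 2.31e-25, 6.79e-22, 3.86e-36, 3.23e-28]
all_significant = all(p < 0.05 for p in benjamini_hochberg(table2_p))
```

With p-values this small, any standard multiple-comparison correction leaves every comparison significant at α = 0.05.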

Effect sizes were large by conventional standards: Cohen’s d = 2.65 for empathy, 1.83 for response relevance, 1.41 for clarity, 1.98 for answer relevance, 1.24 for faithfulness, and 1.56 for the overall average. Beyond statistical significance, these effect sizes indicate practically meaningful differences. For instance, a +1.19 gain in empathy with d = 2.65 suggests a substantial shift relative to the variability of responses, making it highly likely to be perceptible to end users.
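As a consistency check, the effect sizes in Table 2 agree numerically with the paired-samples form of Cohen's d, which equals the t-statistic divided by the square root of the number of pairs. This is an observation from the reported values with n = 100, not a statement of the authors' exact procedure; here D̄ and s_D denote the mean and standard deviation of the per-query differences.

```latex
\begin{align*}
d_z &= \frac{\bar{D}}{s_D}, \qquad
t = \frac{\bar{D}}{s_D/\sqrt{n}}
\;\Longrightarrow\; d_z = \frac{t}{\sqrt{n}} \\[4pt]
\text{Empathy:}\quad d_z &= \frac{26.50}{\sqrt{100}} = 2.65 \\
\text{Response relevance:}\quad d_z &= \frac{18.33}{\sqrt{100}} \approx 1.83 \\
\text{Faithfulness:}\quad d_z &= \frac{12.41}{\sqrt{100}} \approx 1.24
\end{align*}
```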

Distributions and variability

The distributions for Base and RAG are visualized in Figure 2. RAG responses consistently have higher medians and upper quartiles, particularly for faithfulness and clarity. Standard deviations are slightly larger for RAG, often due to a longer upper tail, suggesting occasional very high scores.

Retrieval noise and context relevance

| Metric | Pearson r | p-value |
| --- | --- | --- |
| Response relevance | 0.50 | 1.21e-07 |
| Empathy | 0.35 | 2.97e-04 |
| Clarity | 0.35 | 3.95e-04 |
| Faithfulness | 0.38 | 8.37e-05 |
| Answer relevance | 0.47 | 7.88e-07 |
| Average | 0.72 | 2.52e-17 |

To examine how retrieval quality affects performance, we analyzed correlations between context relevance and the deltas (RAG − Base) across metrics (Table 3, Figure 5). The associations were moderate to strong and statistically significant: r = 0.72 for the overall average (p ≈ 2.5e-17), r = 0.50 for response relevance, r = 0.47 for answer relevance, r = 0.38 for faithfulness, r = 0.35 for empathy, and r = 0.35 for clarity.

These results indicate that when retrieved passages are highly relevant, the gains from RAG are amplified; when the retrieved context is weak or irrelevant, the advantage diminishes or can even turn negative. This illustrates the mechanism of retrieval noise, whereby low-quality retrieval undermines the added value of RAG. A binned analysis further confirmed that performance gains increase with higher context relevance scores, with the strongest improvements observed in the 8–10 relevance range.
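The correlation and binned analyses can be illustrated with the sketch below. The bin edges are hypothetical apart from the 8–10 range mentioned above, and the function names are illustrative rather than taken from the project code.

```python
import math
from statistics import mean

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def gain_by_bin(context_scores, deltas, edges=(0, 2, 4, 6, 8, 10)):
    """Group per-query gains (RAG - Base) by context relevance bin.

    Bins are half-open [lo, hi), except the last, which includes its
    upper edge. Returns {(lo, hi): (count, mean_gain or None)}.
    """
    bins = {}
    for lo, hi in zip(edges[:-1], edges[1:]):
        is_last = hi == edges[-1]
        members = [d for c, d in zip(context_scores, deltas)
                   if lo <= c < hi or (is_last and c == hi)]
        bins[(lo, hi)] = (len(members), mean(members) if members else None)
    return bins
```

Reporting the count alongside each bin mean makes sparsely populated bins visible, which matters when very few queries fall in the lowest relevance ranges.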

One limitation is that context relevance itself was measured via judgment scores; while correlations highlight an association, they do not prove causation. Nonetheless, the findings underscore the critical role of retrieval quality in the success of RAG systems.

Low-context cases

The number of very low-context instances was small. Most metrics had residual counts close to n = 1 in the lowest bins, rendering those statistics unstable. Thus, conclusions about RAG behavior in extremely low-context settings should be treated cautiously.

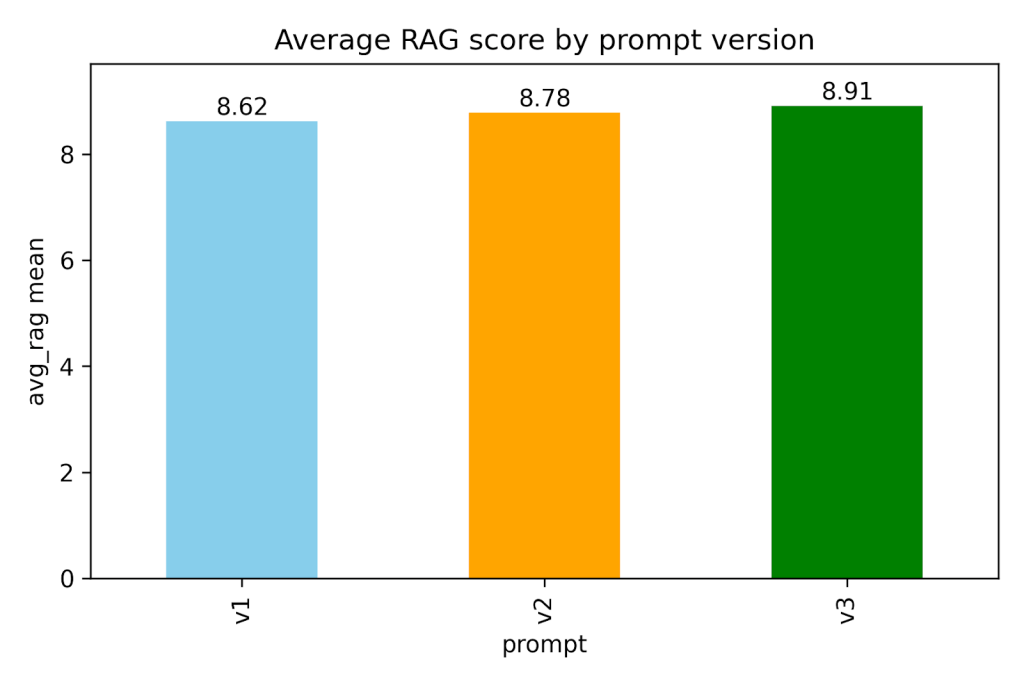

Prompt variations

Robustness to prompt design was evaluated across three prompt versions (Figure 6). The mean overall RAG scores were 8.62 (v1), 8.78 (v2), and 8.91 (v3). Pairwise t-tests revealed significant differences after FDR correction, particularly between v1 and v3 across most metrics (response relevance, empathy, clarity, faithfulness, answer relevance, overall average). Comparisons between v1 and v2 showed significant differences mainly in faithfulness, while v2 vs. v3 showed partial significance (e.g., in response relevance and clarity). These findings suggest that prompt wording materially influences RAG performance, with v3 producing the strongest results.

Human evaluation comparison

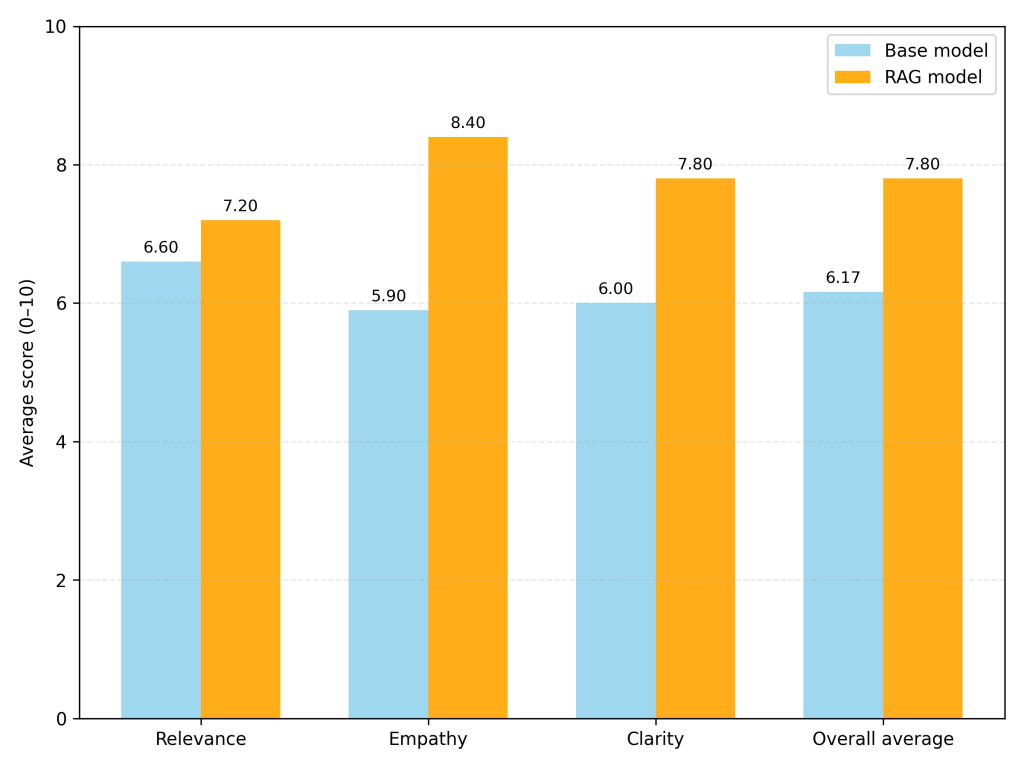

In addition to automated LLM-as-judge assessments, we conducted a small-scale human evaluation on ten items, covering relevance, empathy, and clarity (Figure 7). The results show consistent improvements from Base to RAG, though the absolute scores are somewhat lower than those assigned by the automated evaluator. On average, humans rated the Base system at 6.17 and RAG at 7.80 across the three metrics.

When comparing the gains from Base to RAG, the human ratings showed larger absolute improvements but followed the same relative pattern as the automated scores. Specifically, humans reported an average increase of +2.5 points for empathy, +1.8 for clarity, and +0.6 for relevance, whereas GPT-4 as a proxy evaluator indicated smaller but directionally consistent gains of +1.2, +0.62, and +0.89, respectively. In relative terms, the human-perceived gain was about 2.1× higher for empathy, 2.9× higher for clarity, and slightly lower (0.7×) for relevance compared to GPT-4’s estimates. This suggests that while GPT-4 reliably captures the overall trend of improvements, it may underestimate the perceived gains in affective and stylistic dimensions (empathy, clarity) and slightly overestimate factual relevance. Together, these results support the use of GPT-4 as a baseline evaluator for rapid iteration while highlighting the added value of incorporating human feedback for nuanced, user-centered quality assessment.
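The relative gains quoted above follow directly from dividing the human-rated gain by the GPT-4 gain for each metric. The short check below uses only the figures reported in this section; the variable names are illustrative.

```python
# Gains (RAG - Base) as reported in this section
human_gain = {"empathy": 2.5, "clarity": 1.8, "relevance": 0.6}
gpt4_gain = {"empathy": 1.2, "clarity": 0.62, "relevance": 0.89}

# Ratio of human-perceived gain to GPT-4-estimated gain, one decimal place
ratios = {m: round(human_gain[m] / gpt4_gain[m], 1) for m in human_gain}

# Mean human gain across the three metrics, for comparison with 7.80 - 6.17
mean_human_gain = round(sum(human_gain.values()) / len(human_gain), 2)
```

The mean human gain computed this way (1.63) matches the difference between the reported overall human means for RAG and Base.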

Summary

The results show that RAG yields higher scores than the Base system across all evaluated metrics, with observed mean differences ranging from 0.60 to 1.19 points on the 0–10 scale. Paired t-tests indicate that these differences are statistically significant after FDR correction, and Cohen’s d values indicate large effect sizes for every metric, particularly empathy and answer relevance. Variability in the data is notable, and higher context relevance generally corresponds to greater gains, suggesting that the effectiveness of RAG depends on the quality of retrieved information. Differences across prompt versions indicate that system outputs are sensitive to prompt formulation. Human evaluation, while limited in sample size, shows a similar pattern of improvement, albeit with lower absolute scores. Overall, the data provide evidence of measurable differences between Base and RAG outputs, while highlighting important moderating factors such as context quality and prompt design.

Discussion

This study investigated the impact of augmenting a base large language model with retrieval-augmented generation (RAG) on AI-generated career guidance for high school students. Overall, RAG outputs scored higher than the Base system across all evaluated metrics, with statistically significant mean differences confirmed through paired t-tests (Table 2). Effect sizes (Cohen’s d) were large for every metric and largest for empathy, indicating that the observed differences are not only measurable but also notable relative to response variability. In the human evaluation, the magnitude of improvement was likewise largest for empathy, followed by clarity and relevance; GPT-4 also ranked empathy first, which supports its use as a rapid proxy evaluator. Still, human-rated gains were roughly 2.1× GPT-4’s estimate for empathy and 2.9× for clarity, suggesting that students perceived RAG responses as even more empathic and clear than automated scores alone might imply.

The results suggest that the benefits of RAG are context-dependent. Correlation analyses show that higher context relevance generally corresponds to larger gains, while low-quality retrieval can reduce or even negate improvements. This highlights the impact of “retrieval noise”: irrelevant or low-quality passages limit RAG’s effectiveness, emphasizing the need for robust document filtering and reranking. Variations in prompt formulation also produced measurable performance differences, particularly between the least and most effective versions (v1 vs. v3), underscoring the sensitivity of outputs to prompt design.

Human evaluation, though aligned in trend with automated assessments, is limited by a small and relatively homogeneous rater pool (n = 10). These results should therefore be interpreted as pilot findings rather than definitive conclusions. Nevertheless, the strong parallelism between human and GPT-4 ratings supports the use of LLM-as-judge methods for rapid iteration and prototyping, provided that final decisions about system quality rely on diverse human raters and expert reviewers such as school counselors.

Theoretical implications are modest but notable. Document-level grounding appears to reduce hallucinations and generic responses by providing concrete context; however, the practical impact is strongest in queries that are open-ended, complex, or emotionally nuanced. Short factual questions show smaller or negligible differences, suggesting that RAG is most valuable in situations requiring contextualization rather than mere retrieval of straightforward facts.

From a practical standpoint, the project’s real-world deployment across 20+ schools creates a natural opportunity to collect richer interaction data and improve system performance iteratively. Observed dispersion patterns—higher variance in clarity and response relevance under RAG, with more consistent empathy ratings—indicate that monitoring quality at scale will be important to maintain a reliable user experience.

Limitations are substantial and highlight avenues for future research. The current evaluation relied partly on synthetic or pilot queries and a small, informal human evaluator pool, limiting generalizability. Using a single LLM as an evaluator introduces potential bias, raising concerns about fairness and reproducibility. Future work should systematically examine retrieval quality under controlled conditions, evaluate prompt design across multiple contexts, and expand evaluator diversity to include different regions, school types, age groups, and professional counselors. Evaluations should also consider multiple LLM evaluators of varying architectures, which may yield different sensitivity profiles. Technically, expanding the RAG system’s knowledge base—especially regarding international and Kazakhstan-specific university information—could reduce retrieval noise and improve overall performance. Importantly, the system is deployed in a human-in-the-loop setting, where AI provides preliminary guidance but final recommendations are reviewed and contextualized by educators or mentors. This design helps mitigate risks of over-reliance and aligns with best practices for responsible educational AI deployment.

Finally, ongoing pilot deployments are accompanied by careful ethical oversight, consent processes, and bias monitoring to ensure that RAG assists rather than replaces human counselors. Lessons learned from these pilots will inform scaling strategies, interface refinements, and future controlled evaluations to strengthen both fairness and reliability.

In conclusion, RAG substantially improves the empathy, clarity, and contextual relevance of AI-generated career guidance, particularly for complex and emotionally nuanced queries. These findings underscore the value of combining retrieval with generative models to deliver more supportive and context-aware educational tools. Continued monitoring, ethical safeguards, and iterative optimization will be key to sustaining impact as the system expands to reach more students.

Appendix

100 Synthetic Questions About Career Choices and Educational Paths:

- I’m interested in both technology and psychology. Is there a career that combines these two fields, and what should I study in university to get there?

- How do I know if I should become a doctor or not?

- What’s the difference between computer engineering and computer science?

- I’m passionate about helping people and also really enjoy biology, but I get nervous easily and don’t like working under too much pressure. Does that mean medicine might not be right for me, or are there calmer healthcare roles I should consider?

- I’m good at math but I don’t enjoy it. Should I still consider studying something like engineering or finance?

- I’m considering a career in AI, but I’m not sure which exact path to choose — research, ethics, or development. Can you help me understand the pros and cons of each?

- My parents want me to become a lawyer, but I’m more interested in animation or creative work. How do I convince them or balance both paths?

- I’m very introverted and prefer working alone. What careers might suit someone like me?

- I get easily bored by routine and love solving puzzles and thinking outside the box. Are there any careers that involve constant change and creativity?

- I’m not confident in my abilities, even though others say I’m smart. How can I choose a career path if I constantly doubt myself?

- I’m good at public speaking and organizing people. What could that mean for my future career?

- I like observing people’s behavior and often notice patterns others miss. Does this relate to any career fields?

- I get very emotional when I see injustice or unfair treatment. Could this sensitivity be used in a job somehow, or is it a weakness?

- I love chemistry but don’t want to become a chemist. What else could I do with it?

- Math and literature are my strongest subjects. Is there a profession that involves both analytical and creative thinking?

- I struggle with memorizing facts but do well with hands-on learning. What kind of jobs value that kind of intelligence?

- What jobs use physics besides engineering?

- I enjoy writing stories and analyzing books. Could this lead to a practical career?

- I feel overwhelmed by all the options out there. How do I start narrowing them down?

- I keep changing my mind about what I want to do. Is that normal, and what should I do about it?

- I thought I wanted to become a scientist, but now I’m unsure because I don’t like working in a lab. What alternatives are there that still use scientific thinking?

- What if I choose the wrong career and regret it later?

- I want to make a difference in the world, but I’m not sure how. Does that help in choosing a direction?

- I have so many different interests that I’m afraid of committing to just one. How can I make a choice without losing the rest?

- How important are grades when choosing a career? Can someone with average grades still succeed?

- I like drawing, but people tell me art isn’t practical. Are there careers where I can actually use artistic skills?

- How do I know if I should go to university or take a different path like vocational training?

- I love animals, but I don’t want to become a vet. What other animal-related careers are out there?

- I’m scared of choosing something too niche and not finding a job later. How do I balance passion and practicality?

- What careers are growing in the future because of technology changes?

- I care about the environment. What are some green careers I could explore?

- How do I know if I’m choosing a career for myself or just because of pressure from others?

- Are there careers where I can combine science and communication?

- I often get anxious when thinking about the future. How do I make career planning less stressful?

- How do I know if I’m creative enough for a design-related field?

- I want to help people with mental health struggles. Do I have to become a psychologist, or are there other roles?

- How do I build confidence when I’m not sure what I’m good at yet?

- I’m passionate about social justice. What kinds of jobs allow me to work on that?

- I like video games. Does that mean I should try to work in game design, or is that unrealistic?

- What are careers where I don’t need to sit at a desk all day?

- I’m afraid of disappointing my family if I choose a different path. How do I deal with that?

- What if I fail at my first career choice? Is it easy to change later?

- I enjoy debating and analyzing arguments. Does that mean law is a good fit?

- How do I know if I should prioritize money or passion when making a choice?

- I like working with kids. Are there options besides teaching?

- I don’t want to be stuck in one place my whole life. What careers are global?

- What if I don’t feel passionate about anything yet?

- How can I explore career options if I don’t have connections?

- I’m good at sports, but I don’t think I can go pro. What other careers are linked to athletics?

- How do I choose a career when I keep comparing myself to others?

- Is it normal to feel jealous of friends who already know what they want?

- I’m afraid of making the wrong choice and wasting years. How do I avoid that?

- What careers allow me to combine math with creativity?

- I like fixing things and building. Does that mean engineering is right for me?

- I want to work with technology but not just coding. What else is there?

- How do I handle pressure from teachers who expect me to go into science?

- I enjoy working in teams, but I also want independence. Is there a career that balances both?

- I don’t enjoy science, but I want to help people. What are my options?

- Is it bad if I choose something just because it seems easy?

- What jobs involve storytelling besides being a writer?

- How do I balance hobbies with career choices?

- Can someone introverted still succeed in leadership roles?

- What careers involve a lot of creativity but also stability?

- I want to travel and experience different cultures. What are global career paths?

- I like solving mysteries and analyzing clues. Is that only for detectives?

- What careers exist at the intersection of art and science?

- How do I choose between something practical and something I love?

- I feel like I’m not talented enough for the careers I want. What should I do?

- I’m worried about AI taking jobs. How do I choose a safe career?

- I enjoy helping others solve problems. What kind of jobs value that?

- How important is passion versus skill when choosing?

- What if I change my mind halfway through university?

- How do I manage career stress when I already feel overwhelmed by school?

- Are gap years useful before deciding on a career?

- I like planning events. Could that be a real career?

- What are jobs where I can combine travel and writing?

- I’m afraid of public speaking. Does that mean I should avoid certain careers?

- I’m curious about entrepreneurship but scared of failing. Should I try?

- What are jobs that focus on making society fairer?

- I’m inspired by doctors but afraid of blood. What other healthcare paths exist?

- I enjoy learning languages. What jobs actually use that skill?

- I like tutoring classmates. Could that mean teaching is right for me?

- How do I stay motivated to pursue a long career path?

- I want to work in science but also make discoveries that help people. Where should I start?

- I’m afraid of burnout. How do I choose a career that won’t drain me?

- I don’t want to compete all the time. What careers are less competitive?

- What careers let me design or build things without needing advanced math?

- I enjoy photography. Is there a stable way to make it into a career?

- I feel pressure to choose something now, but I’m not ready. Is that normal?

- What if my dream career doesn’t exist yet?

- How can I tell if I’ll actually enjoy a job in real life?

- What are ways to combine business with helping people?

- I’m interested in space. Do I have to be an astronaut, or are there other roles?

- I like technology but also want to help communities. What options combine both?

- I worry about being stuck in the wrong career forever. How flexible are career paths?

- How do I balance financial security with personal happiness in career choice?

- I want to build something meaningful in life, but I don’t know what. How do I start?

- Can a person succeed in a career if they aren’t naturally talented but work very hard?

- What if I don’t find any career that excites me?

- How do I choose when I’m afraid of missing out on better options?

References

- Holt-White, E., Montacute, R., & Tibbs, L. (2022). Paving the way: Careers guidance in secondary schools. The Sutton Trust. https://www.suttontrust.com/wp-content/uploads/2022/03/Paving-the-Way.pdf.

- Zahrebniuk, Y. (2023). The system of career guidance for high school students in the United States of America. Educational Challenges, 28(1), 188-198. https://educationalchallenges.org.ua/index.php/education_challenges/article/view/193.

- Amoah, S. A., Kwofie, I., & Kwofie, F. A. A. (2015). The school counsellor and students’ career choice in high school: The assessor’s perspective in a Ghanaian case. Journal of Education and Practice, 6(23), 93-98. https://files.eric.ed.gov/fulltext/EJ1079015.pdf.

- Choi, Y., Kim, J., & Kim, S. (2015). Career development and school success in adolescents: The role of career interventions. Education, 63(2). https://www.researchgate.net/publication/277725574.

- Holland, J. L. (1997). Making vocational choices: A theory of vocational personalities and work environments (3rd ed.). Psychological Assessment Resources.

- Super, D. E. (1980). A life-span, life-space approach to career development. Journal of Vocational Behavior, 16, 282-298.

- Lent, R. W., Brown, S. D., & Hackett, G. (1994). Toward a unifying social cognitive theory of career and academic interest, choice, and performance. Journal of Vocational Behavior, 45, 79-122.

- Savickas, M. L. (2005). Career construction theory and practice. In S. D. Brown & R. W. Lent (Eds.), Career development and counseling: Putting theory and research to work (pp. 42-70). John Wiley & Sons.

- U.S. Department of Education, Office of Educational Technology. (2023). Artificial intelligence and the future of teaching and learning: Insights and recommendations. https://www.ed.gov/sites/ed/files/documents/ai-report/ai-report.pdf.

- Gouseti, A., James, F., Fallin, L., & Burden, K. (2024). The ethics of using AI in K-12 education: A systematic literature review. Technology, Pedagogy and Education. https://doi.org/10.1080/1475939X.2024.2428601.

- Kaldybaeva, U. (2025). Using AI in career guidance for students. Eurasian Science Review, 2(Special Issue), 2211-2222. https://eurasia-science.org/index.php/pub/article/view/310.

- Muhammad, R., Patriana, P., Yusrain, Y., Astaman, A., & Manja, M. (2024). Counselling career with artificial intelligence: A systematic review. GUIDENA: Jurnal Ilmu Pendidikan, Psikologi, Bimbingan dan Konseling. https://pdfs.semanticscholar.org/d292/5cd666cd4f1184eeecdc23e334061447dbd2.pdf.

- Prabhune, S., & Berndt, D. J. (2024). Deploying large language models with retrieval augmented generation. arXiv preprint arXiv:2411.11895. https://arxiv.org/abs/2411.11895.

- Lewis, P., Perez, E., Piktus, A., Petroni, F., Karpukhin, V., Goyal, N., Küttler, H., Lewis, M., Yih, W.-t., Rocktäschel, T., Riedel, S., & Kiela, D. (2020). Retrieval-augmented generation for knowledge-intensive NLP tasks. NeurIPS / arXiv. https://arxiv.org/abs/2005.11401.

- Wu, S., Xiong, Y., Cui, Y., et al. (2024). Retrieval-Augmented Generation for Natural Language Processing: A Survey. arXiv. https://arxiv.org/abs/2407.13193.

- Yu, H., Gan, A., Zhang, K., Tong, S., Liu, Q., & Liu, Z. (2024). Evaluation of retrieval-augmented generation: A survey. arXiv. https://arxiv.org/abs/2405.07437.

- Meng, W., Li, Y., Chen, L., & Dong, Z. (2025). Using retrieval-augmented generation to improve question-answering in human health risk assessment: Development and application. Electronics, 14(2), 386. https://doi.org/10.3390/electronics14020386.

- Ji, Z., Lee, N., Frieske, R., et al. (2022). Survey of hallucination in natural language generation. ACM Computing Surveys / arXiv. https://arxiv.org/abs/2202.03629.

- Pangakis, A., et al. (2024). Humans or LLMs as the judge? A study on judgement bias. EMNLP / ACLAnthology. https://aclanthology.org/2024.emnlp-main.474.

- Fitzpatrick, K. K., Darcy, A., & Vierhile, M. (2017). Delivering cognitive behavior therapy to young adults using a fully automated conversational agent (Woebot): A randomized controlled trial. JMIR Mental Health, 4(2), e19. https://pmc.ncbi.nlm.nih.gov/articles/PMC5478797/.

- Kumar, A., Sharma, S., & Mehta, P. (2024). Limitations of large language models in educational guidance: Accuracy and context challenges. Computers & Education, 196, 104738. https://doi.org/10.1016/j.compedu.2023.104738.

- Li, X., Wang, Y., & Chen, H. (2023). Evaluating AI-powered career counseling chatbots: Effectiveness and user alignment. International Journal of Artificial Intelligence in Education, 33, 1124-1145. https://doi.org/10.1007/s40593-023-00350-9.

- Smith, R., & Patel, J. (2024). Dynamic datasets in AI career guidance: Opportunities and gaps. Journal of Career Development and Technology, 11(2), 55-72. https://doi.org/10.1177/0894845324123456.

- Nguyen, T., & Brown, S. (2025). User-centered evaluation of AI in career counseling: Metrics and methodologies. Educational Technology Research and Development, 73, 431-450. https://doi.org/10.1007/s11423-024-10245-8.

- Zhao, L., & Liu, K. (2025). Retrieval-augmented LLMs for career guidance: Integrating generative models with curated knowledge bases. Journal of AI in Education, 5(1), 77-95. https://doi.org/10.1016/j.jaie.2025.01.004.