Abstract

This research examines the influence of a generative AI tutor, MindPath, on middle school students’ learning achievements, interest, and persistence in STEM learning. Students were assigned to experimental and control groups, with the former engaging with MindPath via scaffolded, inquiry-based conversations and the latter working with static textbook content. The experimental group students showed a notable improvement in performance, with their mean scores increasing from 11.33 to 15.76 out of 20 (39% gain; d = 1.33). Retention scores (mean = 15.31) were also high four days after testing, reflecting continued understanding. The control group exhibited minimal gains and lower retention. Discourse analysis showed that MindPath involved students in longer conversations (mean of 26.4 messages per session, 84.7 words per message), including Socratic questioning and real-time scaffolding consistent with active and elaborative learning modes of the ICAP framework. Survey data also indicated high levels of perceived engagement and satisfaction. These findings indicate the promise of conversational AI to facilitate greater persistence and deeper learning, especially for students who have lower prior knowledge initially. More research is required to generalize outcomes, but this research indicates that well-designed AI tutors can augment traditional instruction by offering individualized and interactive learning experiences.

Keywords: Generative AI tutor, STEM education, inquiry-based learning, student engagement, learning persistence

Introduction

The application of artificial intelligence (AI) technologies in educational systems has gained popularity over the past few years, particularly with the development of large language models (LLMs)1. Generative AI technologies are relevant to education because they have the potential to deliver personal instruction at scale and on demand, most notably in science, technology, engineering, and mathematics (STEM) subjects2. There is an urgent need to assess not just the functionality but also the pedagogic efficacy of such systems.

The effectiveness of intelligent tutoring systems (ITS) has long been studied, with early work suggesting that computer-aided tutoring can rival human teaching in some cases2. A meta-analysis found that well-implemented ITSs, particularly for structured subjects, are capable of improving learning outcomes, often approaching the results of human tutors3. These systems, however, cannot flexibly adjust to learners’ inputs. This limitation has motivated the development of generative AI tutors that are capable of adaptive conversation and teaching. Recent work has introduced the Conversation-Based Math Tutoring Accuracy (CoMTA) dataset, aimed at assessing the performance of LLMs on dynamic math problem-solving within a conversational framework4. Its findings highlight a persistent gap between LLM-generated explanations and pedagogical best practices: many responses appear plausible but contain subtle mathematical errors. This raises concerns about the reliability of AI tutors in high-stakes learning contexts.

One approach to closing this gap is to use guided, inquiry-based learning models. One such model is the ICAP framework, which distinguishes learning by levels of cognitive engagement: passive, active, constructive, and interactive5. The latter two are linked to deeper learning. Typical AI tutors engage learners only at the passive or active level (e.g., responding to factual questions). Conversely, a dialogue-based tutor, which encourages students to respond to open-ended queries, think, and follow stepwise learning, can aim for constructive and interactive levels of participation. This study focuses on assessing MindPath, a STEM AI tutor developed by the author on these principles. This model must be empirically validated before drawing strong conclusions about its efficacy.

The value of interaction quality is reinforced in the Community of Inquiry (CoI) model, which stresses the role of cognitive, social, and teaching presence in online learning communities6. A study suggests that AI systems can support such environments by enabling customized instruction and presence through conversation7. However, it is uncertain how far AI can actually imitate “teaching presence,” the instructor’s capacity to monitor, adapt, and intervene. MindPath tries to mimic this by varying lesson phases and customizing quizzes according to responses from students.

Another significant contribution is the Marketing GenAI Tutor Experiment, which examined how the use of a generative AI tutor impacted university students’ interest, self-efficacy, and test performance8. According to their research, students exposed to the AI tutor demonstrated higher motivation and modestly better test scores, especially when the tutor was tailored to the specific course context. This validates the promise of domain-specific generative tutors but also underscores that success is contingent on adapting to learner needs.

Other scholars have investigated the ethical and methodological questions surrounding the use of AI in education. One study warns that AI tutors, while capable of delivering educational convenience, may foster shallow learning or an over-dependence on external tools9. Without appropriate scaffolding and opportunities for reflection, students might start viewing AI as a shortcut instead of a collaborator in the learning process. This concern is critical for LLM-based systems that, by design, prioritize fluency. One study compared an experimental group using ChatGPT with a control group using textbooks10. The ChatGPT group performed 48% better than the control group. However, when the experimental group was given a second test without access to ChatGPT, it performed 17% worse than the control group. This suggests a possible dependency effect that could undermine independent thought if not carefully managed. It also reinforces the need for tools that promote learning autonomy instead of mere response retrieval.

Finally, very few studies explore how generative AI performs for younger students (grades 5–9), a demographic with different cognitive and emotional needs compared to university learners. The majority of AI tutor research seems to focus on higher education and adult learning spaces, which leaves a critical gap in understanding the impact these technologies have on teenage learners.

MindPath was designed specifically to address this gap by integrating phased instruction, Socratic dialogue, comprehension checks, and adaptive quizzes for STEM learners. This study focuses on evaluating MindPath. The study incorporates both experimental and control groups, pre- and post-testing, and retention testing to assess whether structured AI interactions produce meaningful learning gains in comparison to traditional resources like textbooks. It also evaluates discourse quality through AI message analysis, aligned with best practices from a study which emphasizes categorizing messages by cognitive demand11.

This research contributes to the field by offering a replicable model for structured, conversational AI tutoring based on cognitive learning theories. Its significance stems from offering a controlled, multi-phase evaluation of a pedagogically designed, age-specific AI tutor, and providing new insights into how engagement, interactivity, and retention are impacted by generative AI systems. It expands the scope of AI technologies to a younger, under-researched group (grades 5–9), providing developers and educators with actionable strategies to guide the use of such technologies.

This study aims to (1) develop an AI tutoring system that uses phased instruction and interactive dialogue, (2) evaluate its impact on student engagement and test performance compared to a textbook-based control group, (3) analyze the nature and quality of AI–student dialogue using message coding frameworks, and (4) measure retention over time to assess long-term learning effects. The research is guided by the following question: To what extent does the integration of phased lesson progression and inquiry-based dialogue into a generative AI tutor improve student engagement and performance?

This research tests the effectiveness of MindPath, an AI tutoring chatbot built specifically to teach STEM topics to middle school students in a phased, inquiry-based way. The research uses two groups (control and experimental) of 30 students each from grades 5 to 9, who were randomly assigned age-appropriate STEM topics and tested with three medium-difficulty quizzes (pre-test, post-test, and delayed retention test). The scope is to analyze learning outcomes, levels of engagement, and AI–student interactions through quantitative and qualitative data. Although the sample size is modest and the discourse analysis draws on only 90 messages, these were design choices driven by feasibility constraints and validated with rubrics and content review. The research used experimental controls (randomized topic assignment, delayed testing without notice of dates, standardized quiz difficulty, and a fixed interaction time) to maximize reliability. However, despite attempts to prevent external studying between the post-test and the retention test, complete experimental control over this period cannot be guaranteed. Nevertheless, by combining rigor with practical application, the study makes a valuable contribution to understanding how generative AI can facilitate STEM learning.

This study employed a mixed-methods approach to evaluate the effectiveness of MindPath, an AI-based tutoring chatbot designed for phased, dialogue-driven instruction. Participants were 60 students from grades 5 to 9 (30 per group), randomly assigned STEM topics appropriate to their grade level. The experimental group engaged with MindPath to learn the assigned topic, while the control group studied the same topic using textbooks. All students received a pre-test, a post-test following the learning period, and a retention test four days after the post-test. Quantitative information, including test scores, chatbot message statistics, and survey answers, was gathered through a purpose-built website connected to Supabase. Qualitative information was obtained by using a rubric to categorize discourse features such as scaffolding, Socratic questioning, and feedback for three randomly chosen AI messages per student. Surveys gauged student attitudes toward engagement and usability. The research design prioritizes both learning outcomes and quality of interaction, enabling an overarching assessment of generative AI’s contribution to STEM learning.

Methodology

This study assessed the effectiveness of a generative AI tutor, MindPath, by measuring changes in test scores and student engagement after a single learning session. The evaluation compared pre-test and post-test scores, followed by a delayed-retention test four days later. The study used a mixed-methods design that combined quantitative measures (scores, surveys) with qualitative analysis (chat discourse analysis and feedback).

Participants

The study involved 60 students from Grades 5–9, aged approximately 10–15. The students were divided into two groups: an experimental group (n=30) and a control group (n=30). Each group included six students from each grade level, ensuring equal representation across grades. The students were not selected based on prior academic performance and participation was voluntary. All data collection maintained anonymity—no personally identifying information was collected. Sessions were run in small grade-wise groups of 12 (6 in control and 6 in experimental) to ensure consistency and manageability.

Data Collection

Test Scores

Both the experimental and control group students were given an age-appropriate STEM topic vetted by a STEM teacher and were then presented with a 20-mark test of medium difficulty. After this test, students were asked to review their answers. The experimental group then received feedback and explanations from the AI tutor (MindPath) while the control group was asked to review the answers from their textbooks. Next, the experimental group was asked to study with the chatbot for a fixed 25 minutes, while the control group also studied using textbooks. Afterwards, both groups were asked to take another 20-mark test on the same topic with the same medium difficulty. Four days later, both groups took a delayed retention test, again on the same topic and with medium difficulty. All the websites were connected to Supabase, so all data was collected through it.

The quizzes were generated entirely by MindPath, and no question was repeated. Students could select a difficulty level (easy, medium, or hard), and each level followed an item-type pattern set before the “Generate a quiz” step. This pattern also kept the distribution of cognitive levels consistent across quizzes.

A typical breakdown of the cognitive levels targeted in the quiz questions, based on Bloom’s Taxonomy, is presented in Table 1 (Question types) below. This classification demonstrates the range of cognitive demand in the AI-generated assessments, particularly at the medium difficulty setting. (All tests were out of 20 marks; some questions carried more marks than others.)

| Level | Count |
|---|---|
| Remember / Understand | 3–4 |
| Apply | 2–3 |
| Analyze | 2–3 |
| Evaluate | 1–2 |

Chat Logs

During the sessions with MindPath, the chat log from each student was saved and analyzed for dialogue features such as message count, word count, and number of AI questions, as well as qualitative features identified through discourse analysis. These data were also stored in Supabase. From each student’s chat log, 3 messages were randomly chosen for analysis, giving 90 analysed messages in total. For the discourse analysis, the rubric in Table 2 was used.

| Category | Description | Indicator |
|---|---|---|
| Factual (F) | Provides direct knowledge or facts | Uses definitions, textbook-like explanations |
| Socratic Questioning (SQ) | Promotes reflection through open-ended questions | “What do you think…?”, “What would be an example?” |
| Scaffolding (S) | Builds understanding step-by-step, using scaffolding or sequencing | Breaks down concepts, uses real-life comparisons |
| Feedback (FB) | Affirms or corrects learner’s response with praise or elaboration | “Great job!”, “That’s right because…” |
| Clarification (C) | Gently corrects misconceptions or vague answers | Begins with “Not quite,” or rephrases learner input |
| Meta-cognitive (M) | Encourages reflection on learning or planning next steps | “How would you approach…?”, “Why is this the right answer?” |

This rubric was used to analyse the 90 messages and tag each with one or more of the categories. Each message’s categories were identified from the descriptions and indicators and then counted. Because some categories overlap, a message was tagged with every relevant category rather than only the most relevant one. Both the discourse analysis rubric and the message classifications were independently reviewed by two individuals to ensure reliability and pedagogical validity: a data analytics professional with experience in AI and machine learning, and an experienced physics educator with a strong background in higher education.
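The multi-label tagging described above can be illustrated with a short sketch. The function name `tag_distribution` and the sample tags are hypothetical and are not taken from the study's data or code; the sketch only shows why per-category percentages can sum to more than 100% when a message carries several tags.

```python
from collections import Counter

# Rubric codes from Table 2.
CATEGORIES = ("F", "SQ", "S", "FB", "C", "M")


def tag_distribution(tagged_messages):
    """Return {category: (count, percent of messages)} for multi-label tags."""
    total = len(tagged_messages)
    counts = Counter()
    for tags in tagged_messages:
        # A set guards against counting the same category twice per message.
        counts.update(set(tags))
    return {
        cat: (counts[cat], round(100 * counts[cat] / total, 1))
        for cat in CATEGORIES
    }


# Hypothetical tags for four messages (illustrative only, not study data).
sample = [{"F", "S"}, {"SQ"}, {"F", "FB", "SQ"}, {"F"}]
distribution = tag_distribution(sample)
for cat, (count, percent) in distribution.items():
    print(f"{cat}: {count} messages ({percent}%)")
```

Because each percentage is taken over the number of messages rather than the number of tags, the columns are not expected to add up to 100%.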

Survey

After the testing, students were asked to fill out a short survey to capture their perceptions of the AI tutor and their learning experience. The survey included items on engagement, pacing, usability, and feature preferences. The results were used primarily to inform potential improvements to the system and to contextualize the learning outcomes rather than serving as a primary outcome measure.

Procedure

A website was created and shared with the students to guide them step-by-step through the process. The website generated a random student ID to ensure anonymity and then opened the quiz website, where the student entered the random ID and the topic and selected the difficulty (medium). After the first quiz and the scoring feedback, the experimental group was directed to the AI chatbot for a focused learning session, while the control group was directed to review textbooks. The website then redirected both groups to the quiz site for the post-test. Four days later, both groups were asked to take a retention test. Students were informed beforehand that a third test would occur within a few days, but the exact date was not disclosed to avoid additional preparation. Students were asked not to study the same topic between sessions. All activities were designed to be anonymous, using randomly generated IDs rather than identifying information.

Variables and Measurements

The study used a mixed-methods approach to evaluate the impact of the AI tutor on learning outcomes and interaction quality. Learning outcomes were measured using pre-test, post-test, and delayed-retention test scores, each out of 20. This allowed for the calculation of absolute improvement, normalized gain, and retention gains or losses. Improvements were analyzed across groups (control vs. experimental) and across different grade levels. Additionally, the initial performance levels were used to better understand how students at different starting points responded to the intervention. Interaction quality was assessed by analyzing quantitative indicators such as message counts, average message length in words, the frequency of AI-asked questions, and the distribution of coded message categories (from the rubric). The user experience was captured via survey responses, including Likert-scale ratings (1–10) of engagement, a pacing item with the options “Too fast,” “Just right,” or “Too slow,” and qualitative feedback about the perceived helpfulness and clarity of the AI’s responses.

Data Analysis

Statistical analysis was conducted to evaluate learning outcomes and interaction indicators. Descriptive statistics (means, standard deviations, ranges) were computed to summarize the consistency of performance across tests and groups. Paired t-tests were used to assess the statistical significance of improvements from pre- to post-tests and post- to retention tests within groups. Cohen’s d was calculated to quantify effect sizes. Outliers and declines in performance were investigated separately to understand individual variation. Subgroup analyses were performed by grade and initial performance levels to examine differential effects. Behavioral indicators such as time on task and completion persistence were also considered.

To verify the assumptions required for parametric testing, a Shapiro–Wilk test of normality was performed. Given the sample size of 30 per group, the absence of extreme outliers, and minimal deviation from normality, parametric analysis was considered appropriate.
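A paired t-test and Cohen’s d can be sketched with the Python standard library alone. This is a minimal illustration, not the analysis code used in the study: the function name `paired_t_and_d` and the six scores are hypothetical, and the sketch assumes that Cohen’s d is computed on the paired differences (mean difference divided by the standard deviation of the differences), a variant the text does not specify.

```python
import math
import statistics


def paired_t_and_d(before, after):
    """Return (mean difference, t statistic, degrees of freedom, Cohen's d)."""
    if len(before) != len(after):
        raise ValueError("paired samples must have equal length")
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    t_stat = mean_diff / (sd_diff / math.sqrt(n))
    # Assumption: paired-samples effect size (often written d_z).
    cohens_d = mean_diff / sd_diff
    return mean_diff, t_stat, n - 1, cohens_d


# Hypothetical scores out of 20 for six students (not the study's data).
pre = [10, 12, 9, 14, 11, 13]
post = [15, 16, 13, 16, 15, 18]
mean_diff, t_stat, df, d = paired_t_and_d(pre, post)
print(f"mean gain = {mean_diff:.2f}, t({df}) = {t_stat:.2f}, d = {d:.2f}")
```

The p-value and the Shapiro–Wilk test are omitted here because they require a statistics package beyond the standard library.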

Ethical Considerations

All participants were informed prior to the study and their consent (including parental consent where applicable) was obtained. The study was approved by the school coordinator. No personal or sensitive data were collected; random IDs were used instead of names or identifying information. The procedures followed standard ethical guidelines for school-level research and prioritized student privacy.

Design and Functionality of the AI Tutor

The source code is hosted on GitHub for version control and collaboration, which enables efficient code management. The core user interface is built with Streamlit, which allows rapid prototyping of real-time web applications. Streamlit’s responsiveness makes it well suited to developing an interactive learning environment (including both the chatbot and quiz components). The AI chatbot is built on OpenAI’s GPT-4o API. The model temperature is set to 1.08 to encourage creativity and variation in responses, while the maximum token limit is set to 200. This keeps responses concise and easier for students to understand, promoting better focus and dialogue-based learning.
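The reported settings can be pictured as a request to the Chat Completions endpoint of the `openai` Python package. Only the model name, temperature, and token limit come from the text; the system prompt, the helper names `build_request` and `ask_tutor`, and the overall structure are hypothetical placeholders rather than MindPath’s actual code.

```python
# Settings reported in the text; everything else here is a hypothetical sketch.
MODEL = "gpt-4o"
TEMPERATURE = 1.08
MAX_TOKENS = 200

# Placeholder system prompt; the tutor's actual prompt is not given in the text.
SYSTEM_PROMPT = "You are a supportive STEM tutor for middle school students."


def build_request(history, user_message):
    """Assemble keyword arguments for a Chat Completions request."""
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    messages.extend(history)
    messages.append({"role": "user", "content": user_message})
    return {
        "model": MODEL,
        "messages": messages,
        "temperature": TEMPERATURE,
        "max_tokens": MAX_TOKENS,
    }


def ask_tutor(history, user_message):
    """Send the request; needs the `openai` package and OPENAI_API_KEY set."""
    from openai import OpenAI  # imported lazily so the sketch loads without it

    client = OpenAI()
    response = client.chat.completions.create(**build_request(history, user_message))
    return response.choices[0].message.content


request = build_request([], "Why is the sky blue?")
print(request["temperature"], request["max_tokens"], len(request["messages"]))
```

Separating request construction from the network call keeps the settings easy to inspect and test without an API key.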

MindPath’s architecture is based on student-centered learning and phased content. Each lesson is structured into six phases: introduction, core content, interactive exercise, real-world example, summary, and a quiz. This ensures clarity, pacing, and engagement. The chatbot first asks the student about prior knowledge and then tailors its content accordingly. This allows it to identify where the student needs the most reinforcement. MindPath starts with the first phase (introduction) and ends it with a question. It only moves forward if the student responds with words like “ready,” “continue,” or “I’m ready.” This design helps the AI check comprehension and confirm that the student is actively participating. Once a concept is understood, the system moves to the next phase, eventually asking the student to take a quiz.
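The phase gating described above can be sketched as a small state machine. The phase names and readiness cues are taken from the text; the function names and the simple substring matching are assumptions made for illustration, not MindPath’s actual logic.

```python
PHASES = [
    "introduction",
    "core content",
    "interactive exercise",
    "real-world example",
    "summary",
    "quiz",
]

# Readiness cues quoted in the text; matching is deliberately simple and
# would also accept replies such as "not ready" (a known limitation).
READY_CUES = ("ready", "continue", "i'm ready")


def is_ready(reply):
    """Return True if the student's reply contains a readiness cue."""
    text = reply.lower().replace("’", "'")
    return any(cue in text for cue in READY_CUES)


def next_phase(current, reply):
    """Advance one phase on a readiness cue; otherwise stay in place."""
    if is_ready(reply) and current < len(PHASES) - 1:
        return current + 1
    return current


phase = 0
for reply in ["I think plants need sunlight", "I'm ready", "continue"]:
    phase = next_phase(phase, reply)
print(PHASES[phase])
```

In this sketch a content answer keeps the student in the same phase, and the final phase (the quiz) is never advanced past.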

The AI is also designed to be adaptive and motivational. Its tone remains encouraging and supportive, fostering a comfortable learning environment throughout. Real-time adjustments are made based on student responses. By tracking interaction history, the AI estimates a student’s proficiency and adjusts the topic’s complexity or its teaching speed accordingly. Alongside the chatbot is a custom-built quiz system that enables personalized, topic-based assessments. Students choose a topic and difficulty level (easy, medium, or hard), and the quiz generates corresponding question types (ranging from simple true/false to open-ended structured responses). The system uses array indices to manage test progression through two buttons, “previous question” and “next question.” This allows students to navigate freely, review and change answers, and use a “Need Help” button for hints. After submission, students receive detailed feedback on each question from MindPath. This includes correct answers, explanations, and, where necessary, follow-up suggestions.
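Index-based quiz navigation of the kind described above might look like the following sketch. The class name `QuizNavigator` and its methods are hypothetical; the sketch assumes a non-empty question list and shows only the index handling, not the Streamlit interface or the “Need Help” hints.

```python
class QuizNavigator:
    """Track the current question with a list index and store answers."""

    def __init__(self, questions):
        self.questions = list(questions)
        self.answers = [None] * len(self.questions)
        self.index = 0

    def next_question(self):
        # Clamp so "next" on the last question stays on the last question.
        self.index = min(self.index + 1, len(self.questions) - 1)
        return self.questions[self.index]

    def previous_question(self):
        # Clamp so "previous" on the first question stays on the first one.
        self.index = max(self.index - 1, 0)
        return self.questions[self.index]

    def answer(self, text):
        # Overwriting lets a student revise an earlier answer before submitting.
        self.answers[self.index] = text


quiz = QuizNavigator(["Q1", "Q2", "Q3"])
quiz.answer("first try")
quiz.next_question()
quiz.previous_question()
quiz.answer("revised")
print(quiz.index, quiz.answers)
```

Keeping answers in a list parallel to the questions is what allows free movement between questions without losing earlier responses.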

Results

Experimental vs Control Group

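To compute learning metrics (max score = 20), we used standard normalized gain formulations:

$$
\text{Gain} = \text{Post} - \text{Pre}, \qquad
\text{Normalized Gain} = \frac{\text{Post} - \text{Pre}}{20 - \text{Pre}}
$$

$$
\text{Normalized Retention Gain} = \frac{\text{Retention} - \text{Pre}}{20 - \text{Pre}}, \qquad
\text{Retention Loss} = \text{Post} - \text{Retention}
$$

To calculate mean scores, gains, or normalized gains, the following formula was used:

$$
\bar{x} = \frac{1}{n} \sum_{i=1}^{n} x_i
$$

A negative retention loss indicates that the retention score exceeded the post-test score.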
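As a worked illustration, the metrics reported in the tables that follow can be computed per student as shown in this sketch. It assumes the common definition of normalized gain (the gain divided by the maximum possible gain, 20 minus the pre-test score) and takes gain as post minus pre and retention loss as post minus retention, as the table headers indicate. The function name and the example scores are hypothetical.

```python
MAX_SCORE = 20


def learning_metrics(pre, post, retention, max_score=MAX_SCORE):
    """Compute gain metrics for one student's three test scores."""
    if pre >= max_score:
        raise ValueError("normalized gain is undefined at the maximum score")
    headroom = max_score - pre  # marks still available after the pre-test
    return {
        "gain": post - pre,
        "normalized_gain": (post - pre) / headroom,
        "normalized_retention_gain": (retention - pre) / headroom,
        "retention_loss": post - retention,
    }


# Hypothetical student (illustrative only): 10 -> 16 -> 17 out of 20.
metrics = learning_metrics(pre=10, post=16, retention=17)
print(metrics)
```

In this example the retention loss is negative because the retention score is higher than the post-test score. Group-level values would then be the means of these per-student metrics.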

| Grade | Mean Pre-Test / 20 | Mean Post-Test / 20 | Mean Retention / 20 | Mean Gain (Post–Pre) | Mean Normalized Gain | Mean Normalized Retention Gain | Mean Retention Loss (Post–Retention) |
|---|---|---|---|---|---|---|---|
| 5 | 11.67 | 15.42 | 15.00 | 3.75 | 0.39 | 0.30 | 0.42 |
| 6 | 11.50 | 16.50 | 15.58 | 5.00 | 0.56 | 0.44 | 0.92 |
| 7 | 12.17 | 15.75 | 16.17 | 3.58 | 0.47 | 0.53 | -0.42 |
| 8 | 10.83 | 15.92 | 13.50 | 5.08 | 0.38 | 0.19 | 2.42 |
| 9 | 10.50 | 15.25 | 16.33 | 4.75 | 0.49 | 0.57 | -1.08 |

Overall Learning Gains (Experimental): The 30 students in this group had an overall mean score of 11.33 / 20 on the pre-test and 15.76 / 20 on the post-test. This is a 4.43-point increase (95% CI: 3.19 to 5.68), or 39%. The mean normalized gain is 0.46, indicating that nearly half of the possible learning (based on the initial score gap) was achieved through the intervention, still leaving some room for improvement. A paired t-test revealed a statistically significant improvement between pre- and post-test scores: t(29) = 7.29, p < 0.00000005. The alpha level used throughout this research is 0.05. This result indicates a highly significant difference in performance following the intervention. The effect size, measured by Cohen’s d, was 1.33 (95% CI: 0.96 to 1.71), which represents a large effect according to conventional benchmarks. This suggests that the intervention had a substantial and meaningful impact on student learning outcomes.

Retention (Experimental): In addition to immediate post-test gains, students also demonstrated strong knowledge retention. The mean retention score was 15.31, only slightly lower than the post-test mean of 15.76, indicating a minimal retention loss of 0.45 points. Compared to the pre-test mean of 11.33, the average retained gain was 3.98 points (95% CI: 2.62 to 5.34). A paired t-test comparing pre-test and retention scores confirmed a statistically significant improvement: t(29) = 5.99, p < 0.0001. Cohen’s d was 1.09 (95% CI: 0.72 to 1.47), indicating a large effect size. These results suggest that the learning was not only effective in the short term but also largely retained over the four-day interval for this group.
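As a consistency check on the reported statistics, a Cohen’s d computed on paired differences is related to the paired t statistic by a factor of the square root of the sample size. The text does not state which variant of d was used, so the paired-difference form below is an assumption; here $\bar{D}$ is the mean of the paired differences, $s_D$ their standard deviation, and $n = 30$.

$$
d = \frac{\bar{D}}{s_D} = \frac{t}{\sqrt{n}}, \qquad
\frac{7.29}{\sqrt{30}} \approx 1.33, \qquad
\frac{5.99}{\sqrt{30}} \approx 1.09
$$

Both values agree with the effect sizes reported for the pre-to-post and pre-to-retention comparisons.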

Grade-level Performance (Experimental): When analyzed by grade level, the results suggest that MindPath AI was effective across all age groups, including younger students. Notably, Grade 6 students showed the highest normalized learning gain (0.56), indicating that the AI tutor was particularly impactful in supporting conceptual growth at this level. Meanwhile, Grade 9 students demonstrated the highest normalized retention gain (0.57), suggesting strong long-term understanding. Interestingly, Grade 8 students showed relatively lower retention gains (0.19), despite solid post-test performance, which may indicate challenges in consolidating learning. Overall, the tool appears to benefit both middle and upper grade levels, with younger students (Grades 5–6) achieving comparable or even greater gains in some metrics than older peers, highlighting MindPath AI’s accessibility and adaptability for diverse learners.

| Grade | Mean Pre-Test / 20 | Mean Post-Test / 20 | Mean Retention / 20 | Mean Gain (Post–Pre) | Mean Normalized Gain | Mean Normalized Retention Gain | Mean Retention Loss (Post–Retention) |
|---|---|---|---|---|---|---|---|
| 5 | 11.67 | 14.50 | 13.50 | 2.83 | 0.46 | 0.12 | 1.00 |
| 6 | 13.00 | 13.92 | 14.33 | 0.92 | 0.09 | 0.15 | |
| 7 | 14.00 | 15.75 | 15.08 | 1.75 | 0.32 | 0.07 | 0.67 |
| 8 | 12.17 | 13.17 | 13.08 | 1.00 | 0.10 | 0.11 | 0.08 |
| 9 | 12.17 | 14.17 | 13.50 | 2.00 | 0.27 | 0.19 | 0.67 |

Overall Learning Gains (Control): In the control group, students achieved a mean pre-test score of 12.6 / 20, increasing to a mean post-test score of 14.3 / 20, reflecting a modest gain of 1.7 points, or 13.5% (95% CI: 0.87 to 2.53). A paired t-test confirmed this improvement was statistically significant, with a Cohen’s d effect size of 0.77, indicating a moderate impact. While there was learning progress, the mean normalized gain was 0.248, markedly lower than that of the experimental group, suggesting that students achieved only about a quarter of their potential conceptual growth without the AI support.

Retention (Control): Retention data further illustrate the limited depth of learning. The mean retention score was 13.9 / 20, giving a mean retained gain of 1.3 points, lower than the post-test gain. The mean retention loss from post-test to delayed retention was 0.4 points, consistent with typical forgetting curves. A paired t-test comparing pre-test to retention scores showed statistically significant improvement, with a Cohen’s d of 0.65, a medium effect size. However, the mean normalized retention gain was only 0.128, suggesting that long-term retention without the AI tool was relatively limited. Normalized retention gains across grades ranged narrowly, from 0.07 to 0.19, implying minimal depth in retained understanding across all levels.

Grade-level Performance (Control): When broken down by grade, results show that no specific age group stood out in terms of substantial learning or retention. Grade 5 students had the highest raw gain (2.83 points), but this was paired with a notable retention loss of 1 point. Grade 6 had the lowest normalized gain (0.09) but showed a slight increase from post-test to retention (normalized retention gain of 0.15), possibly reflecting delayed consolidation. Grades 7–9 all showed gains of around 1–2 points but with low normalized retention gains (0.07 to 0.19), indicating surface-level recall. Overall, while some short-term improvements were evident, lasting learning was limited.

Direct Comparison: When comparing the two groups, the experimental group using MindPath AI outperformed the control group on every reported metric. While both groups showed statistically significant improvements from pre- to post-test, the experimental group achieved a mean gain of 4.43 points compared to 1.7 points in the control, with a larger effect size (d = 1.33 vs. 0.77). Furthermore, normalized learning gains were nearly double in the experimental group (0.46 vs. 0.25), highlighting the AI tutor’s stronger impact on conceptual understanding. Importantly, retention gains were also more robust: the experimental group retained an average of 3.98 points, while the control group retained only 1.3, with normalized retention gains of 0.41 vs. 0.13 respectively. These differences suggest that MindPath AI not only facilitated short-term performance gains but also promoted deeper, more durable learning.

Correlation between Pre-Test Scores and Gain

The graph shown in Figure 1 indicates a strong negative correlation between pre-test scores and learning gains, with r = –0.69. This suggests that students who began with lower initial understanding tended to experience greater improvements, highlighting the potential of the intervention—particularly MindPath AI—to close learning gaps and support lower-performing students effectively. This inverse relationship is consistent with ceiling effects in education, where students starting with lower prior knowledge often show greater measurable gains as they have more room to improve. Visually, the scatterplot shows a clear downward slope, supporting the idea that MindPath AI was beneficial for struggling learners. While the relationship is strong, some variability is present, suggesting that factors beyond prior knowledge may also influence individual learning gains.
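A Pearson correlation of this kind can be computed directly from paired lists of pre-test scores and gains. The sketch below uses hypothetical data, not the study’s scores, and the function name `pearson_r` is illustrative; it only demonstrates how a negative r arises when lower starting scores go with larger gains.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical pre-test scores and gains (illustrative only, not study data).
pre_scores = [6, 8, 10, 12, 14, 16]
gains = [8, 7, 5, 5, 3, 2]
r = pearson_r(pre_scores, gains)
print(f"r = {r:.2f}")
```

Because the gain is bounded above by 20 minus the pre-test score, some negative correlation is expected on arithmetic grounds alone, which is one reason to read the result alongside the normalized gains.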

Discourse Analysis

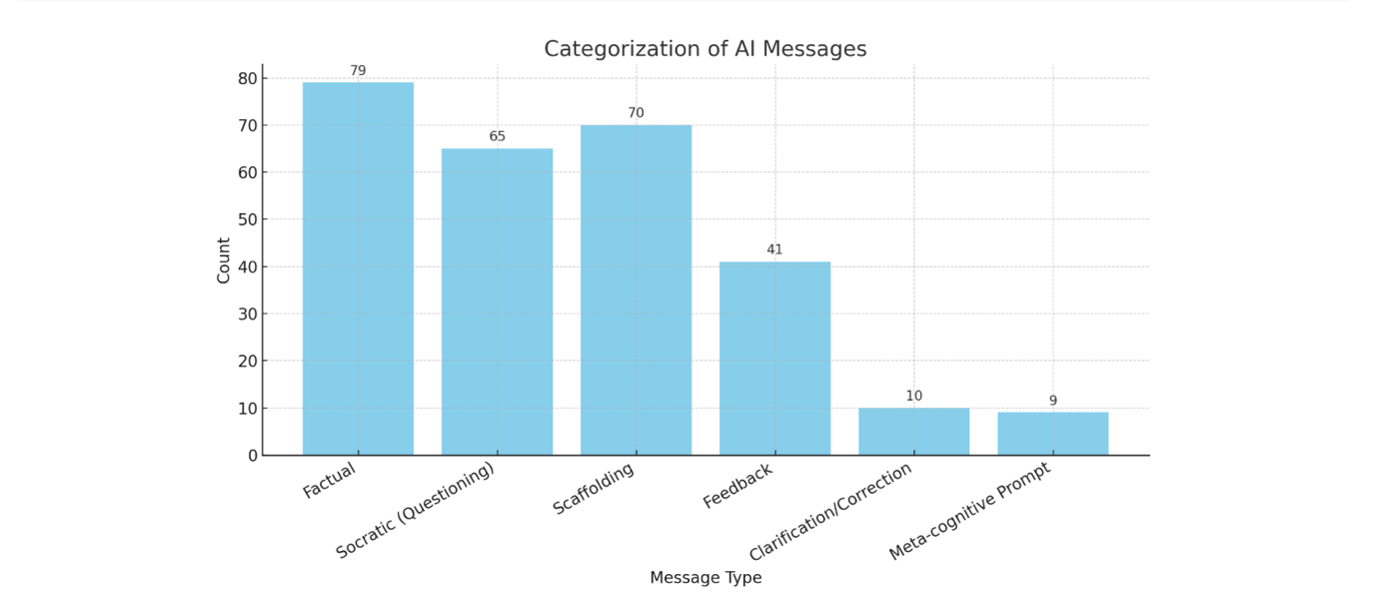

Note: counts and percentages overlap across categories because some messages were tagged with more than one category.

The bar graph reveals that the majority of messages were Factual in nature, accounting for 63.7% (79 messages) of all interactions. This suggests that the AI frequently provided direct information, which is useful for reinforcing key concepts. However, nearly as prominent were Socratic messages (52.4%) and Scaffolding messages (56.5%), indicating that the AI also engaged learners through questioning and structured guidance, both of which are key strategies for promoting active cognitive engagement. The presence of Feedback in 33.1% of messages further reflects the AI’s ability to support learning through response evaluation. Less frequent but pedagogically important were Clarification/Correction (8.0%) and Meta-cognitive Prompts (7.3%), which suggest some engagement with self-regulation and error awareness, albeit at a lower rate. Overall, the distribution shows that MindPath AI blended facts with cognitive support, aligning well with models like ICAP and the Community of Inquiry framework, especially in fostering interactive dialogue.

Table 5 | Chatbot Analysis Summary

| Metric | Value |
| --- | --- |
| Total messages analysed | 90 |
| Total words (all messages) | 7,622 |
| Mean words per message | 84.7 |

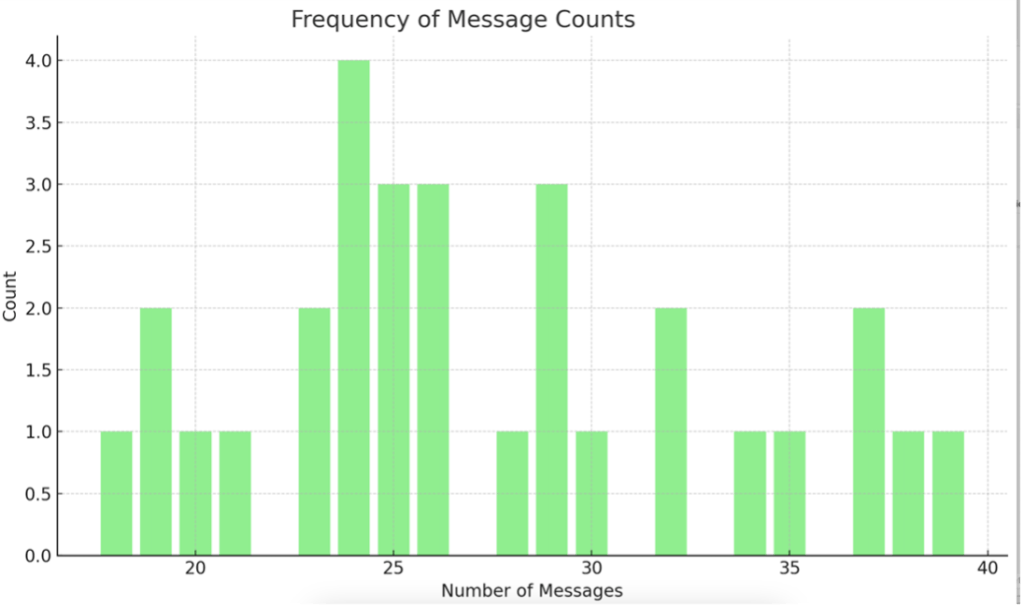

This shows that the AI, on average, used 84.7 words per message across the 6 categories.

The graph shows that session length spanned a range of 21 messages, with the longest interaction consisting of 39 messages and the shortest of 18. The mode was 24 messages, with 4 students having a session of this length. The mean was 26.4 messages, so on average each student-AI interaction lasted 26.4 messages. These messages maintained a 1:1 student-to-AI ratio, with the student initiating the conversation with a topic and the AI ending it once the topic had been covered. This indicates the persistence of the students, who interacted diligently with the AI until the entire topic had been taught. One complete interaction was also analysed in detail (and reviewed by a data analytic professional).

The AI-tutor conversation on the solar system showcases a responsive and encouraging dialogue that mixes fact-based instruction, inquiry, and creative approaches. The AI often presents explicit information (for example, describing why Venus is warmer than Mercury) but couples this with open questions that require the learner to think critically or recall previous information, such as asking why Venus is called Earth’s twin. This balance of questioning and explanation forms a consistent pattern throughout the session. The AI also scaffolds learning by leading the student through creative scenarios, such as imagining life on Mars, and adapts flexibly when the student rejects an activity, offering an alternative that keeps the student engaged. Positive feedback and affirmations are interwoven, building confidence and curiosity. Occasionally, the AI corrects misinformation, always maintaining a positive tone. Together, the conversation reflects an interactive, student-centered approach to supporting exploration, reflection, and concept development.

User Feedback

A survey was conducted to get qualitative and quantitative user feedback. Its results are summarised in the following chart.

To measure students’ perception of the AI’s pacing, the students were asked, “Was the AI too fast, too slow, or just right in guiding you?”

The results show that 67.6% of students found the speed “just right,” while 24.3% found it too slow. These responses are used as supporting feedback only.

To understand why some students had a decrease in scores (either in the post-test or the retention test), they were asked this open-ended question: “If you had a decrease in either the second or third test from the first, what might’ve been the reason?” Responses pointed to a variety of personal and situational factors. Students most commonly cited fatigue or distraction during the test. However, two mentioned forgetting material over time or not understanding it in the first place.

Discussion

This study explored the impact of a generative AI tutor, MindPath, on student learning outcomes, engagement, and retention through inquiry-based, scaffolded interactions. The findings indicate a statistically significant improvement in performance for the experimental group, which used MindPath: the mean score rose by 4.43 points (from 11.33 to 15.76 out of 20), a 39% improvement with a large effect size (d = 1.33). The result is educationally as well as statistically meaningful, because retention scores remained high (15.31), indicating that learning was not only immediate but also largely retained. In contrast, the control group showed modest gains of 1.7 points, with lower normalized learning and retention gains. Discourse analysis revealed a strong presence of factual, scaffolding, and Socratic questioning strategies. Survey feedback suggested that most students found the AI’s pacing and questioning approach conducive to focused and active learning. A strong negative correlation (r = –0.69) between pre-test scores and learning gains also suggests that students with initially lower understanding experienced the greatest benefits.
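The arithmetic behind these headline figures can be sketched as follows, using only the reported group means. The normalized-gain function assumes the common Hake-style definition (gain divided by the available headroom); that definition, and the function names, are assumptions for illustration. A value computed from group means may differ from one averaged over individual students.

```python
MAX_SCORE = 20.0  # test maximum reported in the study


def raw_gain(pre, post):
    """Absolute change in score, in points."""
    return post - pre


def percent_improvement(pre, post):
    """Change expressed as a percentage of the pre-test score."""
    return 100.0 * (post - pre) / pre


def normalized_gain(pre, post, max_score=MAX_SCORE):
    """Hake-style gain: fraction of available headroom gained (assumed definition)."""
    return (post - pre) / (max_score - pre)


# Reported experimental-group means (pre-test and post-test).
pre_mean, post_mean = 11.33, 15.76

print(f"Raw gain: {raw_gain(pre_mean, post_mean):.2f} points")
print(f"Improvement: {percent_improvement(pre_mean, post_mean):.1f}%")
print(f"Normalized gain (from means): {normalized_gain(pre_mean, post_mean):.2f}")
```

With the reported means, the raw gain is 4.43 points and the relative improvement is about 39%, matching the figures above. The normalized gain from group means is illustrative only, under the stated assumption.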

Further discourse analysis showed that the AI tutor engaged students in extended, question-driven dialogue, with interactions averaging 26.4 messages and a mean of 84.7 words per message. This suggests the presence of conceptual scaffolding. MindPath frequently asked probing questions, paused for the student’s response, and constructed its next message from that input. This exchange mirrors the “active” mode of the ICAP framework and the elaborative processing associated with its “constructive” mode, in which learners must process and react to information rather than receive it passively. Notably, the form of the conversation promoted persistence: almost all participants worked through the entire session even though the interaction was an iterative question–answer exchange, which indicates ongoing cognitive engagement and motivation. This tenacity suggests that even students who struggled with the content remained willing to keep engaging. While causality cannot be firmly established, this pattern may reflect a valuable pedagogical affordance of AI tutors: when designed to be responsive, low-friction, and interactive, they can help sustain motivation and foster a sense of progress and mastery.

The AI tutor’s conversation about the solar system illustrates how a student-centered dialogue can foster engagement and promote conceptual understanding. By weaving together fact-based instruction, open-ended questions, and imaginative prompts, the AI promotes not only the acquisition of knowledge but also higher-order thinking. The tutor’s alternation between explanation and inquiry fits the “constructive” and “interactive” engagement levels of the ICAP framework, which are known to foster deeper learning. The AI’s capacity to adapt dynamically when a student resists an activity, while preserving momentum and motivation, is a vital characteristic of successful tutoring.

This research contributes to the growing body of work on AI in education by focusing on the structured, pedagogical dialogues that occur with AI systems. Unlike many existing tools that focus on providing answers or summarizing content, MindPath guided students through lessons with inquiry-based questioning, demonstrating alignment with the ICAP and CoI frameworks. MindPath’s ability to offer real-time individualized support and to foster cognitive engagement shows the technology’s capacity to transform one-size-fits-all instruction in overcrowded classrooms where teachers are scarce. Moreover, the system’s instructional design, including pacing, feedback, and the use of scaffolding, demonstrates adaptive, responsive automation, broadening the boundaries of what AI-powered tutoring could offer.

The research objectives focused on whether an AI tutor using phased, inquiry-based conversation could enhance student learning and engagement. The outcomes show that these objectives were achieved: students improved their test scores, retained knowledge well, and reported high levels of engagement. In addition, the tool seemed especially useful for low-performing students, providing evidence that such AI may be able to support educational equity. While the study did not test long-term retention beyond the delayed test, the results provide promising preliminary evidence supporting MindPath’s design principles. No major discrepancies emerged, though the small decline in scores for a few students and in some grade levels indicates that subsequent AI implementations may need follow-up reinforcement methods or further adaptation to learner needs.

Disaggregated by grade level, the data show that MindPath was effective overall for all student groups, with some notable variation. Grade 6 students had the highest normalized learning gain (0.56), indicating that younger students gained particularly from the structured and interactive nature of the AI teaching. This could be because students at this stage of development are highly responsive to scaffolding and guided dialogue. Grade 9 students had the greatest retention gain (0.57), which suggests that the older students were more capable of consolidating and remembering information following the session. Surprisingly, Grade 8 students had good post-test performance but the lowest retention gain (0.19), suggesting that although initial comprehension was high, reinforcement or consolidation was poor. This hints at a possible cognitive threshold at which learners need increased repetition or metacognitive assistance in order to remember complex material.

In the control group, stratified results were far less consistent. No grade showed exceptional learning or retention gains, and the normalized gains across grades were relatively flat, ranging from 0.07 to 0.19. This contrast suggests that MindPath may have the potential to better support different developmental levels, providing younger learners with the scaffolding they need while allowing older students to deepen and retain knowledge over time. These findings indicate that stratified implementation may hold promise in schools, as it may be especially effective in supplementing traditional teaching methods across diverse grade levels.

Based on these findings, four recommendations emerge. (1) Teachers could adopt AI tutors such as MindPath as an adjunct to facilitate individualized learning, particularly in large or heterogeneous classrooms. (2) Designers of educational AI should focus on dialogic structures, scaffolding, and instant feedback rather than simply giving the “right” answer. (3) Long-term research should investigate the effects of these types of AI tools on knowledge retention across many subjects and with larger, more heterogeneous student groups. (4) Research is also needed to examine how these tools work with learners of diverse academic skills, languages, or learning needs, in order to assess inclusivity and accessibility.

This research has a number of limitations. First, the sample consisted of only 30 students in the experimental group and 30 in the control group, limiting generalizability. Second, because participants were aware they were in a study, there is a possible Hawthorne effect that may have briefly elevated motivation and participation12, although the delayed retention test partly reduces this effect. Finally, the study involved a brief learning intervention and did not assess outcomes over extended periods or in high-stakes assessments, so the long-term effects of the tool remain untested.

Conclusion

As AI continues to shape the future of education, tools like MindPath suggest that intelligent tutoring systems can do more than deliver content: they can actively engage students in the learning process. This study represents an early step, showing that when designed with pedagogically grounded approaches, AI has the potential to make learning more personalized, interactive, and accessible. Future developments and studies can build on this work to further explore and enhance the quality and reach of AI-powered learning experiences. The present goal with MindPath is to examine how conversational AI, when used carefully and ethically, can support student learning without diminishing the essential human aspects of education.

Acknowledgements

Thank you to the data analytic professional and physics educator for their help in reviewing aspects of this paper.

References

1. IBM. (2023). What are large language models (LLMs)? IBM.com. https://www.ibm.com/think/topics/large-language-models

2. Gounder, N., Ramdass, K., Naidoo, S., & Essop, F. (2024). Integrating adaptive intelligent tutoring systems into private higher educational institutes. 2024 IST-Africa Conference (IST-Africa), 1–13. https://doi.org/10.23919/ist-africa63983.2024.10569565

3. VanLehn, K. (2011). The relative effectiveness of human tutoring, intelligent tutoring systems, and other tutoring systems. Educational Psychologist, 46(4), 197–221. https://doi.org/10.1080/00461520.2011.611369

4. Miller, P., & DiCerbo, K. (2024). LLM based math tutoring: Challenges and dataset. ResearchGate. https://www.researchgate.net/publication/381977801_LLM_Based_Math_Tutoring_Challenges_and_Dataset

5. Chi, M. T. H., & Wylie, R. (2014). The ICAP framework: Linking cognitive engagement to active learning outcomes. Educational Psychologist, 49(4), 219–243. https://doi.org/10.1080/00461520.2014.965823

6. Kurt, S. (2024). The Community of Inquiry model (CoI framework) – Educational technology. Educational Technology. https://educationaltechnology.net/the-community-of-inquiry-model-coi-framework/

7. Indriati, L., Nugroho, H., & Hanindhito, B. (2023). Improving learning content and engaging learners through the revised Community of Inquiry (RCoI) framework: Indonesian design students’ perspectives. 15th International Conference on Education and New Learning Technologies, 927–933. https://doi.org/10.21125/edulearn.2023.0338

8. Fryer, L. K., & Thoeni, A. (2024). Marketing GenAI tutor experiment. SSRN. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4900074

9. Yavich, R. (2025). Will the use of AI undermine students’ independent thinking? Education Sciences, 15(6), 669. https://doi.org/10.3390/educsci15060669

10. Bastani, H., Kane, L., Krishnan, S., & Mardani, R. (2024). Generative AI can harm learning. SSRN. https://doi.org/10.2139/ssrn.4895486

11. Rosé, C. P., Wang, Y. C., Cui, Y., Arguello, J., Stegmann, K., Weinberger, A., & Fischer, F. (2008). Analyzing collaborative learning processes automatically: Exploiting the advances of computational linguistics in computer-supported collaborative learning. International Journal of Computer-Supported Collaborative Learning, 3(3), 237–271. https://doi.org/10.1007/s11412-007-9034-0

12. Adair, J. G. (1984). The human subject: Ethical guidelines and institutional review boards. PsycNet APA. https://psycnet.apa.org/record/1984-22073-001
