Abstract

Large Language Models (LLMs) can be used for creative story writing. The process begins with a text prompt which is refined iteratively to align the LLM’s output closely with user expectations. However, each iteration demands extensive reading and evaluation, which is labor-intensive and time-consuming. This inefficiency is problematic in creative story writing. Traditional prompting methods are text-based, requiring users to carefully craft and experiment with different prompts. Recent advances in prompt engineering, such as Few-Shot Prompting and Chain-of-Thought (CoT) Prompting, have improved LLM performance for creative tasks by providing structured examples and decomposing complex instructions. However, these text-centric approaches remain limited by the labor-intensive process of reading and refining lengthy outputs, as well as by LLMs’ restricted context windows and occasional loss of narrative coherence.This study introduces visual prompt engineering, a novel technique in which text prompts are first transformed into visual representations using diffusion models. These visuals are more efficient for humans to process through their intuition, which allows faster iterations before converting the visuals back into text prompts. Experiments show that visual prompting (Method B) reduced the number of iterations by 39-52% to reach the same quality as compared to the text-only baseline (Method A). Through visual prompt engineering, we can improve the efficiency of LLM-based storytelling without sacrificing narrative quality. Visual prompt engineering can be effective in mitigating writer’s block and can also benefit people with learning disabilities.

Background

Large Language Models have revolutionized various fields such as creative story writing. It is especially effective in mitigating writer’s block. This is usually through prompt engineering, which optimizes text inputs to steer the models to generate stories that are closer to the user’s expectations. Advanced techniques like Few-Shot Prompting1, Chain-of-Thought (CoT) Prompting2, and ReAct (Reason + Act)3 further enhance LLM performance by providing examples, breaking down complex questions into logical steps, and eliciting advanced reasoning and planning. Additionally, multimodal AI systems, leveraging visual-textual grounding4 and image-text correspondence, can further improve the function of LLMs by enabling them to process and generate content across different modalities, such as text and images5.

Despite these advancements, leveraging LLMs to create high-quality stories presents significant challenges. The iterative process of text generation is inherently time-consuming and energy-intensive6, often requiring careful tuning of prompts and multiple rounds of generation to achieve the desired outcome. Additionally, the limited context window of most LLMs restricts their ability to maintain narrative coherence over longer texts, frequently leading to inconsistencies, repetition, or forgotten plot points7. Other common problems include drifting off-topic, generic or formulaic language8, and the need for substantial post-editing to produce polished work9.

1: Iterative Prompting

Iterative prompting is a step-by-step method for steering a large language model (LLM) toward better answers by continually revising the instructions it receives. The workflow resembles an ongoing conversation: you supply an initial, often broad prompt—say, “Generate a story about a bear”—and let the model respond. That first output is immediately evaluated for relevance, accuracy, style, or creativity. Based on what you see, you adjust the wording or add detail—perhaps specifying “a brown bear” or clarifying tone, length, or plot points. This can be done manually, with a human judging each draft, or automatically, through an internal feedback loop that scores responses and rewrites prompts without human intervention.

Once the prompt is refined, it is sent back to the model, generating a fresh response that is again reviewed against the user’s expectations. The generate-analyze-tweak cycle repeats until the output meets predefined quality thresholds—whether that means factual correctness, narrative flair, or alignment with brand voice. By closing the loop multiple times, iterative prompting incrementally “locks in” the model’s behavior, ensuring its answers converge on what the user actually desires rather than what the initial, less specific prompt might have produced.

Despite its effectiveness, iterative prompting has several limitations. The process is time-consuming, as users are required to read and evaluate the generated stories after each iteration, as well as optimizing the text prompt, which is labor-intensive and time-consuming.

2: Auto Prompting

Auto prompting is an automated approach to writing input prompts for large language models (LLMs). Instead of relying on manual prompt engineering, the technique uses algorithms—or smaller, specialized models—to craft instructions that reliably draw out strong responses. This reduces human labor while increasing output consistency and efficiency, making it valuable for chatbots, content-generation pipelines, and other interactive AI systems where timely, context-aware answers are essential. The process starts when a user supplies an initial prompt and a set of labeled training data that reflects the desired task or response style.

With that data in hand, the system trains a masked language model (MLM) to understand the underlying patterns. The trained MLM then proposes candidate prompts, which are automatically scored against the labeled data. Prompts that perform well are kept, tweaked, and re-evaluated in a feedback loop, while weaker candidates are discarded. This refinement cycle repeats until the candidate prompt reliably elicits high-quality outputs from the target LLM according to predefined criteria—such as accuracy, fluency, or style fit. When the optimized prompt meets those benchmarks, the system returns it to the user, ready for deployment in production.

In this study, we will focus on applying iterative prompting and visual prompting to creative story writing. Auto Prompting in creative story writing is another possibility, but it is outside of the scope of this paper.

3: Problem Statement and Rationale

Iterative prompting is effective in creative story writing12, but is inefficient due to the number of iterations needed to reach a certain quality. Each iteration is time-consuming and labor-intensive.

4: Significance and Purpose

This study introduces visual prompt engineering, a novel approach on top of iterative prompting, aiming to reduce the iterations to generate high quality stories. Visual prompt engineering can be effective in mitigating writer’s block and can also benefit people with learning disabilities.

5: Objective

The objective of this study is to compare the effectiveness of visual prompt engineering against traditional text-based prompting in the context of story generation.

6: Scope and Limitations

The study uses prompts to generate creative stories of approximately 200 or 500 words. The prompts consist of only one subject due to the limitations of diffusion models. The 200/500 word limit is the average length for a short story, which is usually enough to mitigate writer’s block. 40 volunteers were recruited to iteratively refine the prompts following instructions. We used different GPT models to generate and evaluate stories. GPT-4o was used to generate stories, while GPT-o3 was used to grade the generated stories. A small number of generated stories were sampled and evaluated by humans to verify the consistency of GPT-o3’s grading.

7: Methodology Overview

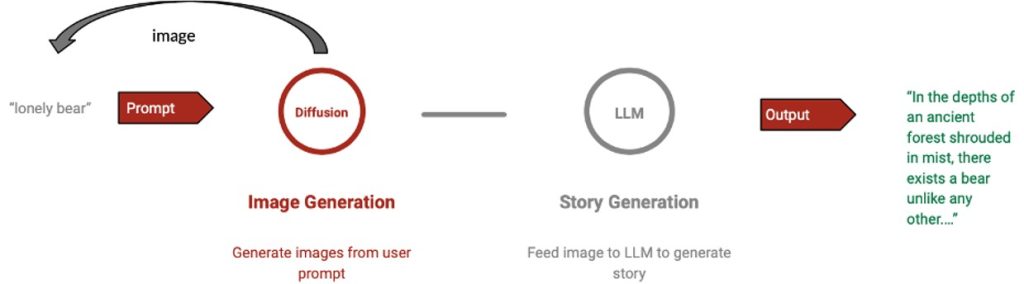

In this study we used GPT-4o to generate stories using 60 prompts covering a balanced mix of genres:

- Comedy

- Fantasy

- Horror

- Romance

Method A follows the traditional text-based iterative prompting to generate a story. Method B first sends the prompt to DALL-E 3 to generate three images. Based on these three images, the users modify the existing prompt, which can be repeated several times until satisfied. This new prompt is sent to GPT-4o for story generation. We capped output at either 200 or 500 words with a 10% margin of error. The process is repeated until the story reaches a certain quality bar (8, 8.5, 9), as evaluated by GPT-o3.

Diagrams of the two methods are shown below:

GPT-o3 graded the stories on a 0-10 scale using a five-dimension rubric as explained in the detailed Methodology section.

Results

| Story quality | # of iterations needed (method A) with SD | # of iterations needed (method B) with SD | Percent reduction |

| 8 | 4.3 ± 0.35 | 2.2 ± 0.32 | 49% |

| 8.5 | 6.5 ± 0.25 | 3.6 ± 0.31 | 45% |

| 9 | 8.4 ± 0.33 | 5.1 ± 0.28 | 39% |

| Story quality | # of iterations needed (method A) with SD | # of iterations needed (method B) with SD | Percent reduction |

| 8 | 2.5 ± 0.55 | 1.2 ± 0.46 | 52% |

| 8.5 | 4.4 ± 0.37 | 2.3 ± 0.29 | 47% |

| 9 | 6.6 ± 0.31 | 3.8 ± 0.24 | 42% |

Across all target story qualities, Method B consistently reaches the same story quality with roughly 39 – 52% fewer iterations than Method A.

As the target word length increases, less iterations are needed to reach a given quality. This is because the LLM is able to write a more detailed story, which includes elements of character development and originality.

Sample Data

Both stories are roughly 200 words, generated from the prompt “a lonely bear”. Both of these stories reached the same quality (8.5 as measured by GPT-o3). Method A needed four iterations while Method B only needed two.

Method A’s story is shown below:

Once upon a time, in a vast and dense forest nestled between towering mountains, there lived a bear named Oliver. Oliver was not like the other bears in the forest. While they roamed in groups, playing and hunting together, Oliver preferred the solace of his own company. He was a solitary soul, finding solace in the quietness of the woods. From a young age, Oliver had felt different. While the other bear cubs wrestled and played, he often found himself wandering off, drawn to the tranquility of the forest. His parents worried about him, urging him to join the other cubs in their activities, but Oliver couldn’t shake off the feeling of isolation that seemed to permeate his very being. Oliver’s solitary nature became more pronounced as he grew older. He found joy in exploring the hidden paths of the forest, discovering its secrets and observing its inhabitants from a distance. His keen senses and quiet demeanor earned him a reputation among the animals as a thoughtful and observant bear. While his fellow bears engaged in boisterous activities, Oliver would often be found near a babbling brook or under the shade of ancient trees, lost in thought.

Method B’s story is shown below:

In the depths of an ancient forest shrouded in mist, there exists a bear unlike any other. Named Baran, he was born with an extraordinary coat, a soft golden-brown hue that shimmered when the rare sunlight filtered through the dense canopy. Despite his magnificent appearance, Baran was a solitary figure, his heart weighed heavy with a deep longing for companionship. Baran’s mother had often told him stories of the world beyond their forest, of lands filled with bears of various kinds and tales of grand adventures. But those were just stories. In reality, Baran’s forest was remote, and he remained the last of his kind in these parts, a gentle giant whose voice had not found an echo for years. Baran’s days were filled with quiet contemplation and exploration. He would roam the moss-covered trails, pausing to listen to the whispers of the ancient trees and the melodies of the forest creatures. Despite his solitude, he harbored a flicker of hope that one day he might encounter another bear like himself. His golden-brown coat stood out against the lush greens and earthy browns of the forest, a beacon of his uniqueness in the vast wilderness. Yet, with each passing season, his longing for companionship deepened, echoing through the silent woods.

Method B’s image is shown here:

Methodology

To evaluate the effectiveness of image-based prompt engineering, we undertook a comprehensive study involving the comparison of an iterative prompting method and image-based prompt engineering to test the efficiency of our approach.

1: Research Design

This study followed a comparative experimental design to evaluate two different methods of prompt refinement for creative story writing. Both methods were applied to the same pool of story prompts to isolate the effect of the prompting technique. Method A used traditional text-based iterations, while Method B incorporated a visual processing step using image generation before story creation.

2: Participants/Sample

The study used 200 unique story subjects generated by the researcher, each representing a distinct narrative seed across different genres (e.g., “a lonely bear”). Of these, 60 prompts were selected for controlled comparison across both experimental conditions (Method A and Method B), ensuring identical content bases. In addition to the prompts, 40 human participants were involved in iteratively refining them. The participants were high schoolers (25 male, 15 female, average age of 17.7). These participants engaged with the LLM by analyzing either generated text (Method A) or images (Method B), then modifying the prompts to better align with their intended narrative goals. All participants contributed anonymously, and their role was limited to guiding the model’s outputs without providing personal or identifiable information.

3: Data Collection

60 prompts were randomly sampled and used for both experimental conditions. Each prompt was assigned to two of 40 participants, who iteratively refined it with either Method A or Method B until the resulting story met its pre-specified quality target (rubric scores 8, 8.5, and 9). The platform automatically logged every refinement, yielding an iteration count for each prompt-story pair. Once the target quality was confirmed, the final stories and their iteration logs were exported as comma-separated value (CSV) files for subsequent statistical analysis.

4: Variables/Measurements

| Variable | Type of Variable | Description |

| Type of Method | Independent | Either Method A (traditional iterative prompting) or Method B (visual prompting) |

| Number of iterations | Dependent | Iterations required to reach a given overall story quality score. |

| Initial prompt content | Control | Each pair of stories (from Method A and Method B) originated from the same initial subject prompt to ensure comparable thematic foundations. |

| Word Count | Control | Stories were constrained to either 200 or 500 words with a ±10% margin to eliminate length-based bias in evaluation. |

| Target Story Quality | Control | The final story quality is the same for both methods. It is set to either 8, 8.5, or 9 / 10. |

| Subject Complexity | Control | All story subjects were limited to a maximum of 5 words to standardize complexity. Additionally all story subjects contained at most one protagonist. |

| Participant Expertise | Control | All 40 writers (20 per method) were 11th or 12th grade high-school students, standardizing skill level and visual processing ability. |

Each story generated through either method was graded using GPT-o3 on a scale of 1 to 10 across five narrative dimensions:

- Setting: The vividness, clarity, and immersiveness of the story’s setting.

- Character Development: The depth, realism, and growth of the characters throughout the narrative.

- Mood/Tone: The effectiveness and consistency of the story’s emotional tone or atmosphere.

- User Engagement: The extent to which the story captivates and sustains reader interest.

- Originality: The creativity and uniqueness of the story’s plot, themes, and ideas.

An average score was computed from these five dimensions to represent each story’s overall quality.

5: Procedure

1) Prompt Generation: Each of the 60 story prompts was routed through two parallel methods: (1) Method A (text-only), in which the prompt was sent directly to GPT-4o for story generation, and (2) Method B (visual-anchored), in which the same prompt first produced an illustrative image via DALL-E 3; that image, paired with the original prompt, was then supplied to GPT-4o to generate the story. When the user looks at the generated images, they often get better intuition on how to improve the prompt.

2) Story Generation: Users generate a story using GPT-4o. Every story must land within ±10 % of its target length (200/500 words). The story is regenerated until the story is within target length.

3) Repeat: Repeat steps 1–2 10 times. All generated stories and their prompt for each iteration are saved for later grading.

Data Analysis

All generated stories are graded by GPT-o3. The minimum iterations to reach the target score (8, 8.5, 9) are recorded and averaged. Additionally the standard deviation and percent reduction from Method A to method B are calculated.

Ethical Considerations

Human subjects were involved in this study solely to conduct iterative refinements during the story generation process. These participants did not submit personal data, nor were they evaluated, identified, or analyzed in any way. All story content, outputs, and evaluations were generated and processed by AI systems. No private, sensitive, or identifiable information was collected, stored, or used at any point in the study, ensuring minimal ethical risk.

Conclusion

1: Restatement of Key Findings

The integration of visual prompting into creative story writing presents a promising avenue for improving efficiency to overcome writer’s block. The findings of our study demonstrate that visual prompt engineering for creative story writing consistently reaches the same story quality with roughly 39 – 52% fewer iterations than traditional iterative prompting.

2: Implications and Significance

Visual prompt engineering allows users to incorporate tone, setting, and mood into the prompts more efficiently, mitigating writer’s block and creating better stories in fewer iterations. For instance, a mist-shrouded castle or derelict starship instantly suggests characters, conflicts, and stakes. Users can gain a clear starting point and escape creative ruts more quickly, all of which translates to richer narratives and higher productivity.

3: Connection to Objectives

The objective of this study is to compare the effectiveness of visual prompt engineering against traditional text-based prompting in the context of story generation, specifically evaluating improvements in efficiency. Visuals are more efficient for humans to process through their intuition, which allows faster iterations before converting the visuals back into text prompts. Experiments show that visual prompting (Method B) reduced the number of iterations by 39-52% to reach the same quality as compared to the text-only baseline (Method A). Through visual prompt engineering, we can improve the efficiency of LLM-based storytelling without sacrificing narrative quality.

4: Recommendations

Future research should explore the potential of integrating visual prompts in various text generation tasks beyond storytelling. For instance, visual prompts could be used to enhance the creation of informative articles by providing a visual context that helps the LLM generate more accurate and detailed content.

Another promising area for future research is the development of techniques to handle more complex prompts. Currently, the focus has been on single-subject prompts, but more intricate prompts could lead to richer and more diverse narratives. For example, a prompt combining multiple characters, settings, and plot elements could produce a more elaborate and engaging story. Developing methods to effectively manage and refine these complex prompts will enhance the creative capabilities of LLMs and allow for the generation of more sophisticated and nuanced content.

Visual prompting is a modular add-on rather than a standalone technique, so it can be layered onto existing strategies (automated prompt tuning or chain-of-thought workflows) to create hybrid pipelines that may further improve efficiency. Evaluating these combinations at scale, with diverse tasks and larger participant pools, will indicate whether the extra visual step delivers net benefits.

5: Limitations

First, quality does not always increase with each iteration due to varying user expertise. In the case that no iterations reach a target quality, the data is excluded.

Second, generated visuals from diffusion models are not always relevant and require several tries. We expect that with improvements in diffusion models, this limitation will be less problematic.

Third, complicated prompts or multi-threaded plots often confused the diffusion model, leading to incoherent images. For this reason the study is limited to single-subject prompts.

Fourth, as the length of the story approaches novel length, it becomes more and more expensive to generate prompts and evaluate the story. Novel writing is not the main application for visual prompting, but users can break down a novel into separate short stories and apply visual prompting to each.

Additionally, stories were graded using a specific rubric. While the rubric contains important features which every story should have, it doesn’t include elements such as cohesion and readability. The Automated Readability Index (ARI)13 can be applied in future experiments.

We initially used human volunteers to grade the stories. However, there is a large variance in scores assigned by human graders, making results expensive and inconsistent. Switching to AI improved the consistency of grading, but its validity largely depends on the quality of the rubric.

Some subjects are harder to develop into engaging stories. Including these subjects introduces larger variance in final grading. In this study we excluded such outliers to ensure consistent grading.

6: Closing thoughts

Overall, incorporating a diffusion model to enhance prompt engineering, particularly in creative story writing, has proven to be more efficient. By integrating visual prompts, the iterative process of refining and generating stories is streamlined, increasing the efficiency compared to traditional methods. Visual prompt engineering can also be integrated into other existing methods, such as auto prompting; however, that is outside the scope of this experiment.

Acknowledgements

I would like to express my heartfelt gratitude to the dedicated volunteers and the thoughtful reviewer who contributed their time and expertise to this project. Their constructive feedback, encouragement, and insightful perspectives were instrumental in refining my work and helping me grow throughout this process. I am deeply appreciative of their contributions and commitment.

References

- T. B. Brown, B. Mann, N. Ryder, M. Subbiah, J. Kaplan, P. Dhariwal, D. Amodei, I. Sutskever. Language models are few-shot learners. Advances in Neural Information Processing Systems 33, 1877-1901 (2020). [↩]

- J. Wei, X. Wang, D. Schuurmans, M. Bosma, F. Xia, E. Chi, Q. V. Le, D. Zhou. Chain-of-thought prompting elicits reasoning in large language models. Advances in Neural Information Processing Systems 35, 24824-24837 (2022). [↩]

- S. Yao, J. Zhao, D. Yu, N. Du, I. Shafran, K. Narasimhan, Y. Cao. ReAct: Synergizing reasoning and acting in language models. International Conference on Learning Representations (2022). [↩]

- M. Li, Y. Li, Y. Li, J. Chen, Y. Zhang, X. Zhang, Y. Li, Y. Li. Towards visual text grounding of multimodal large language model. arXiv.org (2025). [↩]

- J. Koh, R. Salakhutdinov, D. Fried. Grounding language models to images for multimodal inputs and outputs. arXiv.org (2023). [↩]

- arXiv. Prompt engineering and its implications on the energy consumption of large language models. https://arxiv.org/html/2501.05899v1 (2025). [↩]

- Z. Yang, Z. Dai, Y. Yang, et al. XLNet: Generalized autoregressive pretraining for language understanding. Advances in Neural Information Processing Systems 32, 5754-5764 (2019). [↩]

- E. Kreiss, et al. Narrative inconsistencies and repetition in long-form language model outputs. Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing (2023). [↩]

- M. Roemmele, A. S. Gordon. Improving story generation evaluation with realistic corpora. Proceedings of the Workshop on Storytelling (2018). [↩]

- Y. Hosni. Prompt engineering best practices: Iterative prompt development. https://pub.towardsai.net/prompt-engineering-best-practices-iterative-prompt-development-22759b309919 (2024). [↩]

- E. Levi, E. Brosh, M. Friedmann. Intent-based prompt calibration: Enhancing prompt optimization with synthetic boundary cases. arXiv.org (2024). [↩]

- arXiv. From pen to prompt: How creative writers integrate AI into their writing practice. https://arxiv.org/html/2411.03137v2 (2024). [↩]

- J. P. Kincaid, L. J. Delionbach. Validation of the Automated Readability Index: A follow-up. Human Factors 15, 17-20 (1973). [↩]