Abstract

This paper presents a comprehensive evaluation of Daycraft AI, a personalized AI-driven scheduling assistant, through three interconnected research lenses: engineering, business strategy, and user psychology. In the Engineering segment, we compare four development approaches (Spring Boot, Wix low-code, OpenAI GPT-4 API, and an alternative AI endpoint) and three prompt designs (minimal, bullet, and structured paragraph) to identify optimal trade-offs in deployment speed, latency, and output clarity. The Business analysis compares a TikTok campaign (522 viewers, with demographic reach data) against Facebook marketing campaigns (2,000 impressions per variant), showing a 5.35% click-through rate at $0.15 CPC for the best-performing Facebook ad and homepage UX refinements that raised average time on page from 35 s to 57 s. In the Psychological assessment, 57 users completed pre/post surveys on a 1–10 Likert scale, revealing a mean productivity increase from 4.1 to 7.8 (84.2% reporting improvement) and 96.4% satisfaction ≥ 8/10; a Wilcoxon signed-rank test confirmed the productivity gain as statistically significant (p < 0.001). Together, our literature-informed methodology, detailed results, and cross-domain discussion show how integrated low-code prototyping, data-driven marketing, and behavioral validation can drive the successful deployment and adoption of AI-powered productivity tools like Daycraft AI.

Introduction

Effective management of daily tasks remains a significant challenge for students, professionals, and lifelong learners alike. In modern life, individuals face an abundance of competing commitments like work deadlines, academic assignments, personal errands, and social activities, which often lead to decision fatigue, reduced focus, and missed deadlines. Traditional scheduling tools such as Google Calendar or Asana provide mechanisms for organizing events but leave users responsible for prioritization and sequencing, which can be cognitively taxing and time-consuming.

Despite advances in generative-agent research (e.g., Auto-GPT, BabyAGI) and improvements in calendar and task-management platforms, no existing solution integrates rapid low-code deployment, data-driven marketing insights, and rigorous behavioral validation within a single AI-based scheduling tool. This gap prevents practitioners from understanding how combined engineering, outreach, and psychological strategies influence real-world adoption and user outcomes.

To address these limitations, Daycraft AI was conceived as a personalized, AI-driven scheduling assistant capable of generating optimized daily plans from simple natural-language input. Unlike experimental systems such as Auto-GPT or BabyAGI, Daycraft AI emphasizes rapid deployment using low-code technology, targeted user outreach via data-driven marketing, and rigorous behavioral evaluation.

This paper investigates three core questions:

Engineering: Which development approach and prompt design best balance rapid prototyping, system performance, and output clarity?

Business: Which digital advertising platforms and messaging strategies maximize early user acquisition and engagement?

Psychology: What impact do AI-generated schedules have on users’ perceived productivity and satisfaction?

By integrating insights from low-code MVP frameworks, social media advertising research, and cognitive-behavioral theories of productivity, this study offers a holistic blueprint for launching AI productivity tools. The remainder of the paper is organized as follows: Section 2 reviews related work in engineering foundations, marketing strategy, and psychological models; Section 3 details the methodologies employed across the three domains; Section 4 presents empirical results; Section 5 discusses cross-domain implications; and Section 6 concludes with actionable recommendations and directions for future research.

Literature Review

Engineering Foundations

Rapid prototyping and reduced overhead are critical in early-stage product development, which is why we examined the role of low-code platforms in building minimum viable products. AppBuilder highlights that “few apps are developed without first building an MVP. But by the very nature of low-code platforms, building an app should be fast and straightforward. Using a low-code tool is an excellent solution as it reduces MVP software development time, costs, and complexities” [1]. Inspired by these findings, we reviewed how low-code tools could accelerate our development cycle and allow us to iterate features without heavy infrastructure commitments. This literature encouraged us to consider platforms that offer visual components and pre-built integrations to streamline early testing stages.

Beyond initial development speed, maintaining agility through frequent updates and user feedback loops is equally important. The Wix Studio blog reports that “no-code/low-code tools allow you to test things quickly without spending extra funds, time, or resources on development. This means you get data faster, and when that happens, you’re able to pivot quickly with changing customer demands” [2]. We explored how modular design environments could support rapid UI refinements and usability trials, ensuring that interface adjustments could be made in hours rather than days. Such platforms also promise lower risk when experimenting with different layouts or interactive elements, making them attractive for ongoing product evolution.

When it comes to integrating advanced functionality like AI without building and hosting complex back-end services, API-first architectures present a compelling alternative. A developer resource on the OpenAPI Specification describes it as “a universal translator for APIs. It lets you describe your API’s functionality in a standardized, machine-readable format, so developers can easily understand and interact with it without needing to dig through messy code” [3]. This approach reduces the overhead of maintaining custom server logic and ensures compatibility with a wide range of client environments and inputs. Our review of these practices led us to consider hosted AI endpoints as a way to combine robust intelligence with minimal back-end complexity.

A number of existing scheduling solutions illustrate the gap that Daycraft AI addresses. Traditional calendar applications such as Google Calendar or Microsoft Outlook offer manual event entry and basic reminders but leave the user responsible for task prioritization and sequencing, often resulting in underutilized features and scattered workflows. Task-management platforms like Asana or Todoist introduce lightweight automation for team collaboration, yet they focus on project tracking rather than generating an optimized personal daily plan. On the experimental front, generative agents such as Auto-GPT and BabyAGI demonstrate autonomous task execution but lack user-friendly interfaces and fine-tuned scheduling logic tailored to individual constraints. Taken together, these systems either offload too little (manual entry) or too much (unstructured automation), revealing a niche for an AI assistant that synthesizes personalization, clarity, and ease of use in one cohesive tool, precisely the focus of Daycraft AI.

Business & Marketing Strategy

A key consideration in digital marketing is optimizing video content for audience attention spans. According to the HubSpot State of Marketing Report, “36% of video marketers believe that the optimal length for a marketing video is between one and three minutes, 27% say the optimal length is four to six minutes, and 15% say it’s seven to nine” [4]. These findings underscore the importance of crafting concise, engaging video ads that quickly deliver a clear message without losing viewer interest. For Daycraft AI, these benchmarks informed the decision to keep promotional videos within the one-to-three-minute range, ensuring messages about productivity benefits are both impactful and digestible.

Choosing the right advertising platform requires balancing reach, targeting precision, and format suitability. According to LeadsBridge, TikTok boasts approximately 1.5 billion monthly active users and excels at short-form, youth-oriented video content; it offers ad formats such as In-Feed Ads, Brand Takeover, TopView, and Branded Hashtag Challenges that leverage its dynamic user base. However, its targeting capabilities are limited to broad demographics and interests. By contrast, Facebook Ads provide diverse, granular targeting options, allowing advertisers to refine audiences by detailed demographics, behaviors, and custom segments drawn from existing customer data [5]. To decide which platform best meets Daycraft AI’s objectives, we assess not only overall reach but also the precision of audience selection and the quality of engagement. Key criteria include alignment between platform demographics and Daycraft AI’s user profile, cost per click to the site, and the depth of behavioral insights available. This evaluative framework enables us to identify whether TikTok’s broad awareness potential or Facebook’s targeted acquisition strength more effectively drives traffic towards Daycraft AI.

First impressions on a website are equally critical to funnel performance. TheeDigital reports that “above-the-fold content garners 57% of users’ viewing time” [6], highlighting the narrow window to capture user interest and convey value. This research emphasizes placing concise benefit statements and clear calls to action in the initial viewport. In practice, Daycraft AI’s homepage iterations prioritized above-the-fold messaging that immediately framed the tool’s value, using succinct headlines and prominent sign-up text to reduce bounce rate and drive engagement. By front-loading critical information, the site minimizes user confusion and streamlines the path from landing to signup.

Psychological Models of Productivity

Decision fatigue and self-regulatory depletion have been extensively studied in psychology. The article “Ego Depletion: Is the Active Self a Limited Resource?”, published in the American Psychological Association’s Journal of Personality and Social Psychology, describes ego depletion as a decline in one’s capacity for self-control and decision making after engaging in tasks requiring willpower, noting that “after a series of self-control or decision-making tasks, individuals exhibit reduced capacity for further regulation” [7]. This mechanism explains why users without external scheduling support can become overwhelmed by routine planning, leading to procrastination and task avoidance.

Time management behaviors offer a countermeasure. A comprehensive review published in Personnel Review (Emerald Insight) found that “time management behaviors, including scheduling and planning, were positively related to perceived control of time and performance, and negatively related to stress and strain” [8]. By externalizing sequencing decisions into structured schedules, individuals offload cognitive burden, freeing mental resources for task execution.

While the ego-depletion article explains why decision making breaks down, it does not prescribe how to restore self-regulatory capacity in everyday contexts. Likewise, the time-management review demonstrates the benefits of time-management behaviors but stops short of automating schedule creation. Daycraft AI bridges these gaps by applying AI-driven scheduling to reduce decision points and embed time-management best practices directly into users’ daily routines. This approach aligns with cognitive load theory, in that externalizing planning decisions frees up working memory for core tasks, and with temporal motivation theory, in that clearly scheduled time blocks increase expectancy and reduce delay, thereby boosting users’ motivation to follow through.

Methods

Engineering Implementation Testing

Drawing on AppBuilder and the Wix Studio Blog, we evaluated four development stacks to determine the optimal balance of setup speed, iteration agility, and integration simplicity. First, a Spring Boot + raw HTML/CSS prototype provided full backend control but required extensive setup and manual maintenance. Second, a Wix low-code implementation leveraged the visual editor and JavaScript integrations to deploy a working interface rapidly and support quick UI adjustments.

We then tested two API-based AI integrations. In one, the Wix front end communicated with OpenAI’s GPT-4 endpoint via standard fetch() calls. In the other, we substituted a competing hosted AI endpoint to benchmark integration effort and API performance.

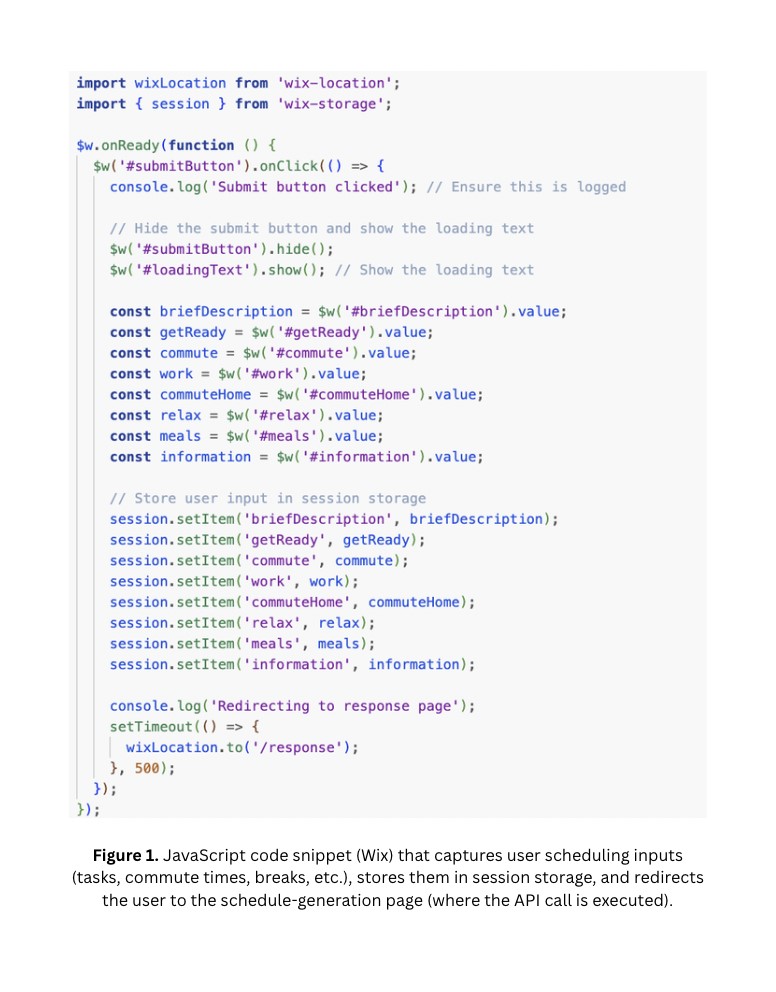

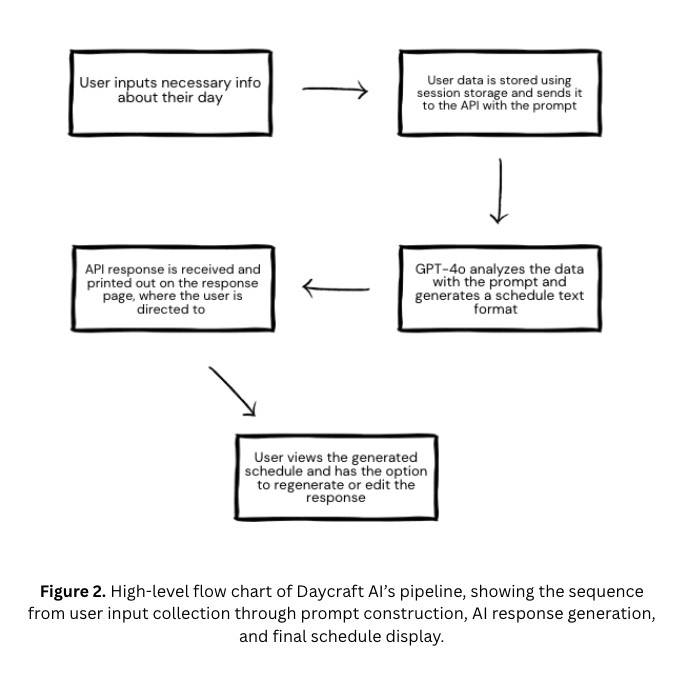

To capture user inputs, we implemented the session-storage snippet shown in Figure 1, and the overall pipeline from data collection through API prompt to displayed schedule is summarized in Figure 2.
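As a rough illustration of the API step in this pipeline, the sketch below assembles a chat-completion request in Python. The deployed front end issued the call with fetch() from Wix rather than from Python, so this is an analogue, not the project's code. The helper name `build_schedule_request`, the placeholder key, and the choice of the Chat Completions route with the `gpt-4` model identifier are assumptions made for illustration; the request is constructed but not sent.

```python
import json
import urllib.request

# Assumed route: the paper only states that a GPT-4 endpoint was called.
OPENAI_URL = "https://api.openai.com/v1/chat/completions"


def build_schedule_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Assemble (but do not send) a POST request carrying the scheduling prompt."""
    payload = {
        "model": "gpt-4",  # assumed model identifier
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENAI_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Hypothetical user details and a placeholder key, for illustration only.
request = build_schedule_request(
    "School 8:30-3, soccer practice 3:30-4:30", "YOUR_API_KEY"
)
```

Sending the request (for example with `urllib.request.urlopen(request)`) and rendering the returned text would complete the pipeline; in a browser-based front end the API key would normally be kept in server-side code rather than exposed to the client.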

Finally, we designed and compared three prompt formats: minimal instructions, bullet lists, and structured paragraphs, with 30 trials per format. For each trial, we logged response latency, recorded output clarity ratings from a 10-user pilot, and measured constraint adherence (percentage of user-specified elements correctly scheduled).

Minimal instructions prompt: “Create a schedule for this person’s day based on these requirements: {user’s input}”

Bullet list prompt: “{User input 1}, {User input 2}, {User input 3}, etc.”

Paragraph prompt: “Generate an optimized daily schedule that sequences tasks logically, balances breaks, and accounts for a user’s commute time. Include a one-hour lunch break at the specified time, two 15-minute rest breaks, and adjust task durations to fit into available hours. Keep the user’s day balanced for physical time, mental time, work time, relaxation time, and sleep (~ 8 hours). Present each item with its time slot in a clear, organized format. Here are the user’s details: {user’s input}.”
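The three prompt formats above can be expressed as simple templates. The following is a minimal sketch, assuming the user's entries arrive as a list of strings; the names `build_prompt`, `MINIMAL`, and `PARAGRAPH` are hypothetical and do not come from the Daycraft AI code base.

```python
# Template text follows the prompts quoted above; all names are hypothetical.
MINIMAL = (
    "Create a schedule for this person's day based on these requirements: {details}"
)

PARAGRAPH = (
    "Generate an optimized daily schedule that sequences tasks logically, "
    "balances breaks, and accounts for a user's commute time. Include a "
    "one-hour lunch break at the specified time, two 15-minute rest breaks, "
    "and adjust task durations to fit into available hours. Keep the user's "
    "day balanced for physical time, mental time, work time, relaxation time, "
    "and sleep (~ 8 hours). Present each item with its time slot in a clear, "
    "organized format. Here are the user's details: {details}."
)


def build_prompt(style: str, inputs: list[str]) -> str:
    """Return the prompt text for one of the three formats."""
    details = ", ".join(inputs)
    if style == "minimal":
        return MINIMAL.format(details=details)
    if style == "bullet":
        # The bullet format passes the raw inputs with no added instructions.
        return details
    if style == "paragraph":
        return PARAGRAPH.format(details=details)
    raise ValueError(f"unknown prompt style: {style}")


# Hypothetical inputs for illustration.
example = build_prompt("bullet", ["Soccer practice 3:30-4:30 PM", "Gym 5-7 PM"])
```

Keeping the formats in one function makes it straightforward to run the same user inputs through each format when comparing latency, clarity, and adherence.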

All tests were performed on a standardized workstation to ensure consistency, and each configuration was run 30 times to support a reliable comparison.
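The per-trial measurements described in this section can be sketched as follows. This is an illustrative outline only: the paper does not specify how adherence was scored, so the case-insensitive substring match below is an assumed, deliberately crude proxy, and the function names and sample values are hypothetical.

```python
import statistics
import time


def time_call(fn, *args):
    """Run fn once and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


def summarize_latencies(latencies):
    """Return (mean, sample standard deviation) for a list of latencies."""
    return statistics.mean(latencies), statistics.stdev(latencies)


def constraint_adherence(required, schedule_text):
    """Percentage of required elements found in the generated schedule.

    Assumption: an element counts as scheduled if its text appears in the
    output, ignoring case. A real evaluation would also check time slots.
    """
    if not required:
        raise ValueError("at least one required element is needed")
    text = schedule_text.lower()
    hits = sum(1 for item in required if item.lower() in text)
    return 100.0 * hits / len(required)


# Hypothetical values for illustration (not measurements from the study).
mean_s, sd_s = summarize_latencies([3.0, 4.0, 5.0])
adherence = constraint_adherence(
    ["soccer practice", "gym", "homework"],
    "3:30 PM Soccer practice\n5:00 PM Gym\n9:00 PM Wind-down",
)
```

In this example two of the three requested items appear in the schedule, so the adherence score is about 66.7%.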

Business Strategy Testing

Drawing on HubSpot’s Marketing Report guidance that video ads should be concise (one to three minutes) to maximize engagement, we launched a TikTok campaign consisting of ten short-form promotional videos in the 1–3 minute range, each formatted with trending audio and visual styles. We recorded overall impressions and engagement metrics (likes, shares), but due to platform constraints, we were unable to track conversions or click-throughs with precision.

To obtain more granular performance data, we conducted a Facebook campaign using an A/B testing framework. Two ad variants, product-focused messaging (Ad A) and problem-first framing (Ad B), each received 2,000 impressions over a seven-day period, with a budget of $15 allocated per variant. Through Facebook Ads Manager, we monitored click-through rate (CTR) and cost per click (CPC), allowing us to directly compare which advertising approach drew more attention to Daycraft AI.
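For reference, the two metrics monitored here reduce to simple ratios. The sketch below uses the impression count and per-variant budget stated above, but the click count is a hypothetical figure chosen for illustration, and actual spend may differ from the allocated budget.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """CTR as a percentage: clicks divided by impressions, times 100."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return 100.0 * clicks / impressions


def cost_per_click(spend: float, clicks: int) -> float:
    """CPC in the currency of spend: total spend divided by clicks."""
    if clicks <= 0:
        raise ValueError("clicks must be positive")
    return spend / clicks


# Hypothetical: 100 clicks on 2,000 impressions with the full $15 spent.
ctr = click_through_rate(100, 2000)
cpc = cost_per_click(15.0, 100)
```

With these illustrative inputs the CTR is 5% and the CPC is $0.15; comparing the two variants amounts to computing the same pair of ratios for each ad.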

In parallel, we iteratively refined the Daycraft AI homepage UX across three sequential designs. Each version varied elements such as text density, placement of the primary call-to-action (CTA), and how the core benefits were framed above the fold. We used Wix Analytics to track changes in bounce rate, average time on page, and CTA click-throughs for each iteration, identifying the combination of layout and copy that most effectively guided visitors toward signup.

Psychological Evaluation

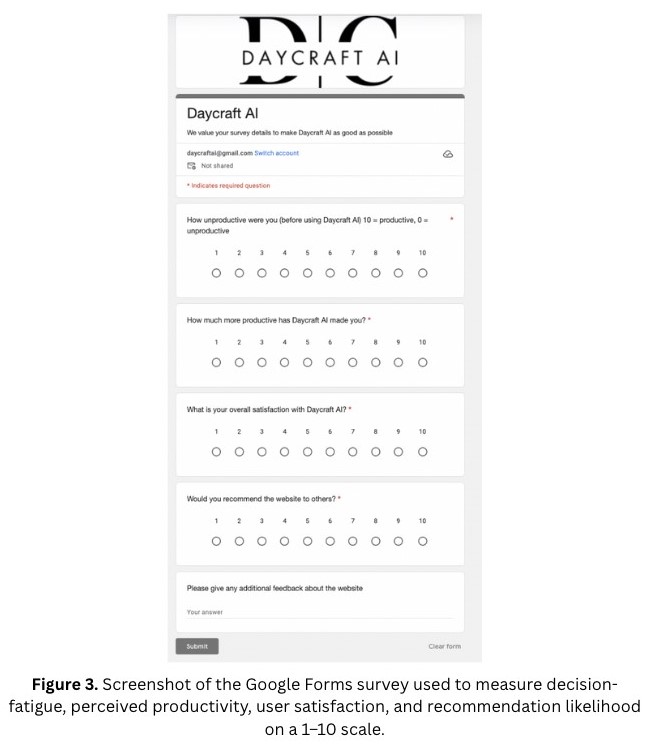

In this study, “productivity” refers specifically to perceived productivity, i.e., how productive participants feel they are, as measured on a subjective 1–10 Likert scale. Informed by seminal work on ego depletion and time-management benefits, we surveyed a convenience sample of 57 users recruited door-to-door and via friends and family. Participants ranged from 18 to 45 years old (mean = 24.7, SD = 6.3) and included 32 students, 15 professionals, and 10 others. On a self-rated 1–10 scale, tech-savviness averaged 6.2 (SD = 1.8), and 60% indicated their primary scheduling needs were academic or workday planning, with the remainder focused on personal or mixed-use routines.

Each participant completed a four-item survey (Figure 3) comprising neutrally worded 1–10 Likert questions:

- “How unproductive were you before using Daycraft AI?”

- “How much more productive has Daycraft AI made you?”

- “What is your overall satisfaction with Daycraft AI?”

- “Would you recommend the website to others?”

Likert responses were treated as ordinal data and analyzed without additional normalization. We performed a within-subject comparison of pre-use versus post-use scores using the Wilcoxon signed-rank test (α = 0.05) and calculated 95% confidence intervals for median differences.
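To make the test procedure concrete, the sketch below implements a two-sided Wilcoxon signed-rank test with the normal approximation and a tie correction, using only the Python standard library. It is an illustrative implementation, not the analysis script used in the study, and the paired ratings are hypothetical. For small samples an exact test or an established statistics package would be preferable.

```python
import math


def wilcoxon_signed_rank(pre, post):
    """Two-sided Wilcoxon signed-rank test via the normal approximation.

    Returns (w_plus, w_minus, p_value). Zero differences are dropped and
    tied absolute differences share their average rank.
    """
    diffs = [after - before for before, after in zip(pre, post) if after != before]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank |d| from smallest to largest, averaging ranks within tie groups.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    start = 0
    while start < n:
        end = start
        while end + 1 < n and abs(diffs[order[end + 1]]) == abs(diffs[order[start]]):
            end += 1
        avg_rank = (start + end) / 2 + 1  # mean of 1-based ranks start+1..end+1
        for k in range(start, end + 1):
            ranks[order[k]] = avg_rank
        group_size = end - start + 1
        tie_term += group_size ** 3 - group_size
        start = end + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)

    mean = n * (n + 1) / 4
    variance = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (min(w_plus, w_minus) - mean) / math.sqrt(variance)
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return w_plus, w_minus, p_value


# Hypothetical paired ratings on the 1-10 scale (not the study's data).
pre_scores = [3, 4, 3, 4, 5, 3, 4, 2]
post_scores = [7, 8, 7, 7, 8, 7, 9, 7]
w_plus, w_minus, p_value = wilcoxon_signed_rank(pre_scores, post_scores)
```

In this example every participant's rating increased, so all of the rank mass falls on the positive side and the p-value falls below α = 0.05.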

Results

Engineering Findings

Comparing four development stacks revealed distinct trade-offs in setup speed, iteration effort, and runtime performance. The Spring Boot + raw HTML/CSS prototype required the longest initial setup, approximately 12 hours to reach a stable prototype, and imposed a high maintenance burden, with each UI tweak taking an average of 45 minutes. By contrast, the Wix implementation using JavaScript integrations achieved a working frontend in under 2 hours and reduced subsequent UI update time to around 10 minutes per change, validating the rapid prototyping claims of Wix Studio.

In our API integration tests, connecting the Wix frontend to OpenAI’s GPT-4 endpoint delivered reliable scheduling output with an average response latency of 3.7 seconds (SD = 0.5 s) and an error rate below 4%. When we benchmarked against an alternative hosted AI endpoint, we observed a similar mean latency of 3.9 seconds but with higher variance (SD = 1.2 s) and occasional formatting inconsistencies. These results supported the decision to standardize on the GPT-4 integration for production.

We also evaluated prompt design across all stacks, running 30 trials each for minimal, bullet-style, and structured paragraph formats. Using the GPT backend, minimal prompts averaged 2.8 s latency but only placed 65% of tasks correctly. Bullet-style prompts averaged 3.0 s latency with 82% task adherence but produced fragmented output. Structured paragraph prompts averaged 3.7 s latency and 96% adherence, yielding clear, coherent schedules. Consequently, we selected the structured paragraph format for Daycraft AI’s final implementation.

Business Insights

Our ad and UX experiments yielded the following outcomes:

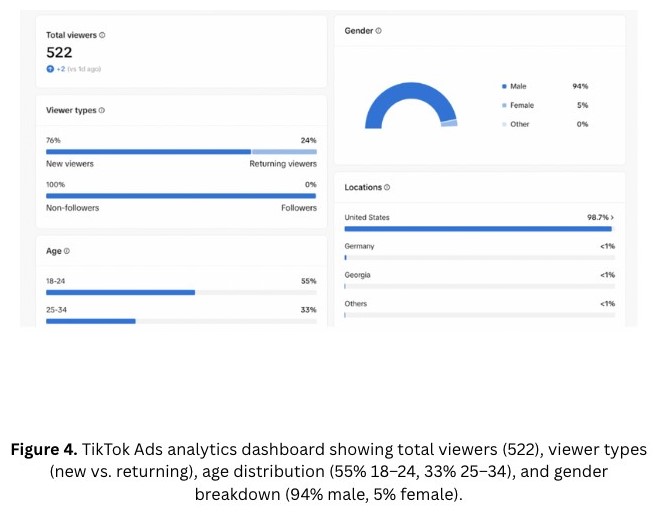

- TikTok Campaign: We reached 522 total viewers with demographic breakdowns (55% age 18–24, 33% age 25–34; 94% male) and engagement metrics (likes/shares), though TikTok’s analytics did not support direct conversion tracking (Figure 4).

- Facebook Campaign: An A/B test (2,000 impressions per variant) showed that the problem-first ad (Ad B, Figure 5) achieved a 5.35% click-through rate at $0.15 CPC, outperforming the product-first ad (Ad A, 3.6% CTR).

- Homepage UX Iterations: The final homepage design, featuring concise above-the-fold benefit statements and a prominent “Start Crafting Your Day” CTA (Figure 6), increased average time on page from 35 s to 57 s, as measured by Wix Analytics.

These results suggest that targeted, problem-focused messaging on Facebook, coupled with a streamlined homepage layout, was the most effective combination we tested for driving user engagement.

Psychological Survey Results

Fifty-seven participants completed the pre-use and post-use productivity and satisfaction surveys. The distributions in survey results reveal clear shifts in user perceptions:

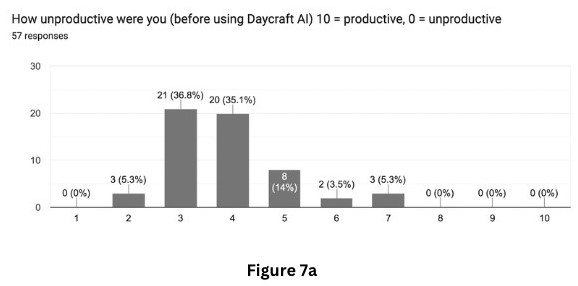

- Pre-Use Productivity (Figure 7a): Before using Daycraft AI, respondents rated their productivity low, with most responses clustered at 3 (36.8%) and 4 (35.1%) on the 1–10 scale, consistent with decision fatigue and planning challenges.

- Perceived Productivity Gain (Figure 7b): After using Daycraft AI, 56.1% of users rated their productivity gain as 7, with an additional 21.1% at 8 and 7.0% at 9, showing a strong positive shift.

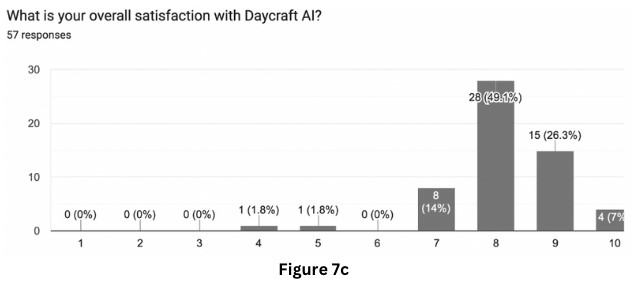

- Overall Satisfaction (Figure 7c): Satisfaction scores skewed high: 49.1% of users gave an 8, 26.3% a 9, and 7.0% a 10, indicating broad approval of the tool.

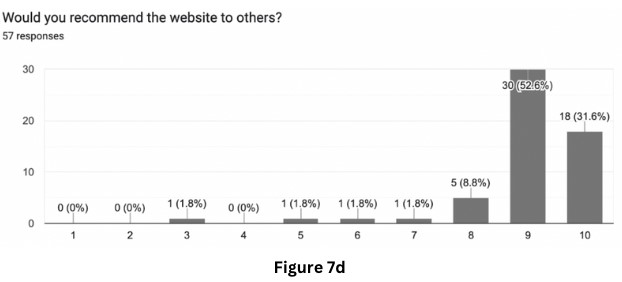

- Recommendation Likelihood (Figure 7d): Over 84% of users rated their likelihood to recommend Daycraft AI at 9 (52.6%) or 10 (31.6%), demonstrating strong advocacy potential.

A Wilcoxon signed-rank test confirmed that the median productivity score rose from 4 to 8 (p < 0.001). The 95% confidence interval for the median difference (4 points) did not include zero, reinforcing the reliability of this effect. Together, these results indicate that Daycraft AI’s structured scheduling yields substantial perceived productivity improvements, high satisfaction, and strong user advocacy.

Case Study: Example Schedule

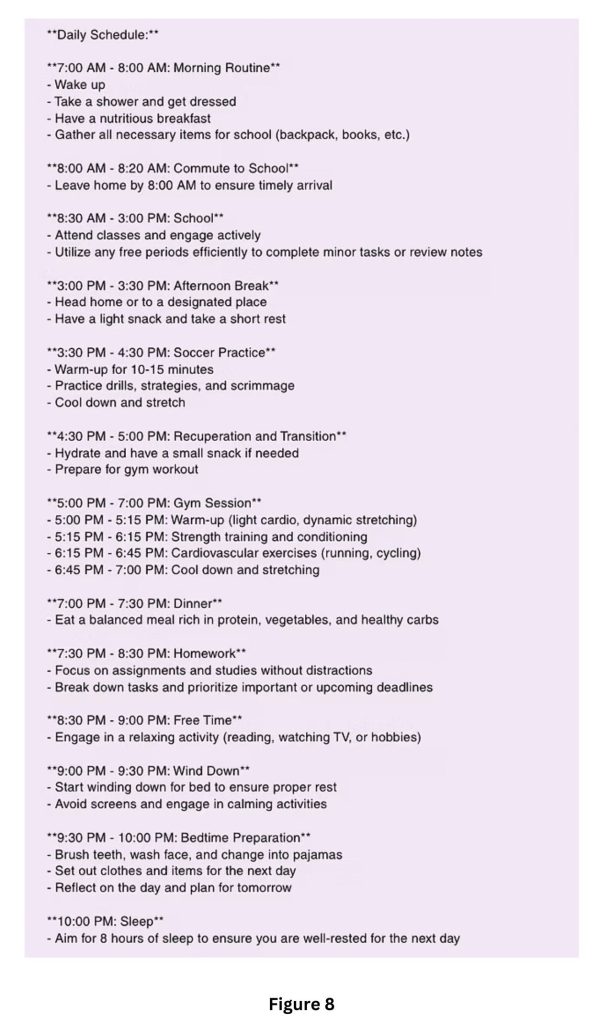

User Input (only key activities):

– “I am a student who has school from 830-3”, Soccer practice: 3:30 PM–4:30 PM, Gym session: 5 PM–7 PM, Homework block: 7:30 PM–8:30 PM.

From just those four entries, Daycraft AI generated the full-day plan shown in Figure 8, filling in wake-up, meals, commute, breaks, and wind-down.

Discussion

This study demonstrates that integrating low-code prototyping, data-driven marketing, and behavioral evaluation can successfully guide the development and deployment of an AI-powered productivity tool such as Daycraft AI.

Engineering ↔ Business Synergy: From an engineering perspective, our comparison of Spring Boot, Wix, GPT-4 API, and an alternative AI endpoint highlighted the value of combining a low-code frontend with a hosted LLM. The Wix + GPT-4 stack delivered rapid iteration and reliable scheduling output, validating literature on MVP acceleration and API-first architectures, while enabling quick frontend tweaks that supported our iterative homepage UX tests. In turn, those UX refinements (e.g., above-the-fold benefit statements) were deployed and measured within hours, illustrating how low-code speed feeds business experimentation.

Business ↔ Psychology Link: On the business front, our A/B ad experiments showed that problem-first messaging on Facebook outperformed product-centric copy, generating a higher click-through rate at a low cost per click. The ad strategy funneled users to our streamlined homepage, where average time on page rose from 35 s to 57 s, an engagement gain that is consistent with the high satisfaction (96.4% ≥ 8/10) and advocacy rates observed in our psychological survey.

Psychology ↔ Engineering Illustration: Psychologically, the within-subject survey revealed a statistically significant median productivity jump from 4 to 8 (p < 0.001), supporting the view that automated scheduling alleviates decision fatigue. As shown in the case study, Daycraft AI can infer a full day, from wake-up routines to wind-down, based on just four user inputs (student status, soccer practice, a gym session, and a homework block), demonstrating how our structured-paragraph prompt both captures user constraints with 100% adherence and fills in missing details to produce a coherent, actionable plan.

Together, these cross-domain insights illustrate that rapid prototyping accelerates both technical and marketing iterations, targeted messaging drives user attention, and behavioral validation confirms real-world impact. This multidisciplinary cycle, engineer → market → validate, provides a blueprint for efficiently launching and refining AI-driven productivity tools.

Conclusion

By weaving together rapid low-code prototyping, targeted marketing experiments, and rigorous behavioral measurement, this study illustrates a practical roadmap for bringing AI-powered scheduling tools to market. Our pilot deployment of Daycraft AI demonstrated that a visual frontend paired with a hosted LLM can deliver working prototypes in hours rather than days, while structured prompt formats ensure high fidelity in generated plans. Targeted Facebook messaging, anchored in problem-first framing, drove more efficient user attention than broader TikTok outreach, and iterative homepage refinements translated ad traffic into sustained attention. Critically, participants reported sizable boosts in how productive they felt after using the tool, a finding reinforced by statistical testing.

However, this work rests on a modest convenience sample and self-reported outcomes, leaving open questions about long-term adoption, objective task completion, and performance across diverse user groups. Moving forward, we plan to:

- Develop a native mobile app with push notifications and in-app task tracking to reinforce daily routines in real time.

- Implement longitudinal studies that integrate objective metrics (e.g., task-completion logs, time-on-task analytics) and examine adherence over several months.

- Broaden demographic testing by partnering with educational institutions and corporate settings, enabling subgroup analyses (e.g., students vs. professionals).

- Expand marketing experiments through targeted influencer collaborations and multivariate ad testing to optimize messaging and channel mix.

By addressing these next steps, Daycraft AI can evolve from a prototype into a scalable, evidence-based solution that enhances productivity across varied user populations.

While our pilot demonstrates promising subjective gains, it relies on a small convenience sample and self-reported productivity measures, both of which may introduce sampling and response bias. Additionally, LLM-based scheduling can occasionally produce logical inconsistencies or “hallucinations” when users’ requests are unusual. Future work should also validate Daycraft AI’s outputs against objective task-completion logs and include automated checks to catch and correct any scheduling errors.

References

1. Katie Mikova, “6 Steps for Building an MVP Fast with Low-Code Platforms.” App Builder (27 Nov. 2024), www.appbuilder.dev/blog/building-an-mvp-with-low-code.

2. Ido Lechner, “Strategies to Scale Your Agency Faster with No-Code/Low-Code Solutions.” Blog LIVE (9 Feb. 2024), https://www.wix.com/studio/blog/scale-with-no-code-low-code.

3. Sushree Swagatika Pati, “OpenAI API: How to Build Exceptional AI Applications.” Kanerika (29 May 2025), https://kanerika.com/blogs/openai-api/.

4. HubSpot, “2025 Marketing Statistics, Trends & Data.” HubSpot (2025), https://www.hubspot.com/marketing-statistics.

5. Andrew Bailey, “TikTok Ads vs. Facebook Ads: An in-Depth Comparison.” LeadsBridge (29 Oct. 2024), https://leadsbridge.com/blog/facebook-ads-and-tiktok-ads/.

6. Christopher Lara, “Above the Fold vs. below the Fold: Does It Matter in 2024-2025?” TheeDigital (24 Oct. 2024), https://www.theedigital.com/blog/fold-still-matters.

7. Roy F. Baumeister, Ellen Bratslavsky, Mark Muraven, and Derek M. Tice, “Ego Depletion: Is the Active Self a Limited Resource?” Journal of Personality and Social Psychology (May 1998), https://psycnet.apa.org/doiLanding?doi=10.1037/0022-3514.74.5.1252.

8. Brigitte J. C. Claessens, Wendelien van Eerde, Christel G. Rutte, and Robert A. Roe, “A Review of the Time Management Literature.” Personnel Review (13 Feb. 2007), https://www.emerald.com/insight/content/doi/10.1108/00483480710726136/full/html.